Affective Computing

A player's emotional reactions to in-game events, generated content, or the suggestions of a computational co-creator are paramount to players' enjoyment of a game as well as to designers' creativity. I have looked into computational models of affect, covering player frustration, tension, arousal and other states.

Affect in Spatial Navigation: A Study of Rooms

Emmanouil Xylakis, Antonios Liapis, Georgios N. Yannakakis

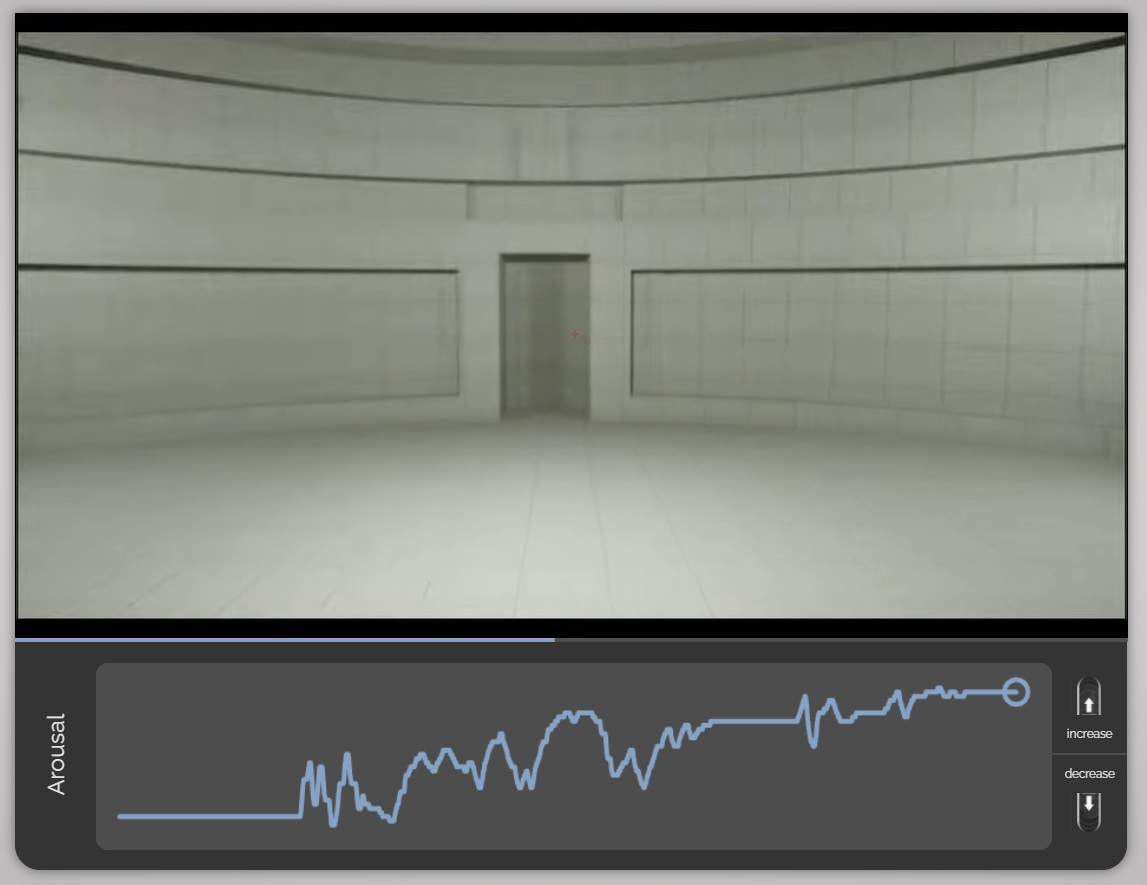

Screenshot of PAGAN using the RankTrace tool during an arousal annotation task: navigation video (top) and continuous arousal annotation (bottom).

Abstract: How do spaces make us feel? What is the perceived emotional impact of built form? This study proposes a framework to identify and model the effects that our perceived environment can have by taking into consideration illumination and structural form while acknowledging its temporal dimension. To study this, we recruited 100 participants via a crowd-sourcing platform in order to annotate their perceived arousal or pleasure shifts while watching videos depicting spatial navigation in first person view. Participants' annotations were recorded as time-continuous unbounded traces, allowing us to extract ordinal labels about how their arousal or pleasure fluctuated as the camera moved between different rooms. Given the subjective nature of the task and the noisy signals from real-time annotation, a number of processing steps are applied in order to convert the data into ordinal relationships between affect metrics in different rooms. Experiments with random forests and other classifiers show that, with the right treatment and data cleanup, simple interior design features can be adequate predictors of human arousal and pleasure changes over time. The dataset is made available in order to prompt exploration of additional modalities as input and ground truth extraction.

in IEEE Transactions on Affective Computing 16(2), 2025.
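
The abstract above describes converting time-continuous traces into ordinal relationships between rooms. A minimal sketch of that idea follows: it averages a trace over each room visit and labels every room-to-room transition as an increase, a decrease, or stable. The function names, the fixed `threshold`, and the per-visit averaging are assumptions made for illustration, not the processing pipeline used in the paper.

```python
from statistics import mean


def room_means(trace, room_ids):
    """Average the annotation trace over each contiguous visit to a room.

    trace    -- list of annotation values, one per time step
    room_ids -- list of room identifiers, aligned with trace
    Returns a list of (room_id, mean_value) tuples in visiting order.
    """
    visits = []
    start = 0
    for i in range(1, len(trace) + 1):
        # Close the current visit at the end of the trace or on a room change.
        if i == len(trace) or room_ids[i] != room_ids[start]:
            visits.append((room_ids[start], mean(trace[start:i])))
            start = i
    return visits


def ordinal_labels(visits, threshold=0.05):
    """Label each transition between consecutive room visits.

    The threshold (an assumed value) suppresses small fluctuations that are
    more likely annotation noise than a real change in affect.
    """
    labels = []
    for (prev_room, prev_val), (next_room, next_val) in zip(visits, visits[1:]):
        delta = next_val - prev_val
        if delta > threshold:
            change = "increase"
        elif delta < -threshold:
            change = "decrease"
        else:
            change = "stable"
        labels.append((prev_room, next_room, change))
    return labels
```

Because the traces are unbounded, an absolute threshold like this one would likely need to be scaled per participant in practice; that choice is left open here.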

GameVibe: a multimodal affective game corpus

Matthew Barthet, Maria Kaselimi, Kosmas Pinitas, Konstantinos Makantasis, Antonios Liapis, Georgios N. Yannakakis

Screenshots from the 30 different first person shooter games in the GameVibe corpus. Short videos of each game are annotated for viewer engagement in a time-continuous trace by five annotators.

Abstract: As online video and streaming platforms continue to grow, affective computing research has undergone a shift towards more complex studies involving multiple modalities. However, there is still a lack of readily available datasets with high-quality audiovisual stimuli. In this paper, we present GameVibe, a novel affect corpus which consists of multimodal audiovisual stimuli, including in-game behavioural observations and third-person affect traces for viewer engagement. The corpus consists of videos from a diverse set of publicly available gameplay sessions across 30 games, with particular attention to ensure high-quality stimuli with good audiovisual and gameplay diversity. Furthermore, we present an analysis on the reliability of the annotators in terms of inter-annotator agreement.

in Scientific Data 11, 2024.

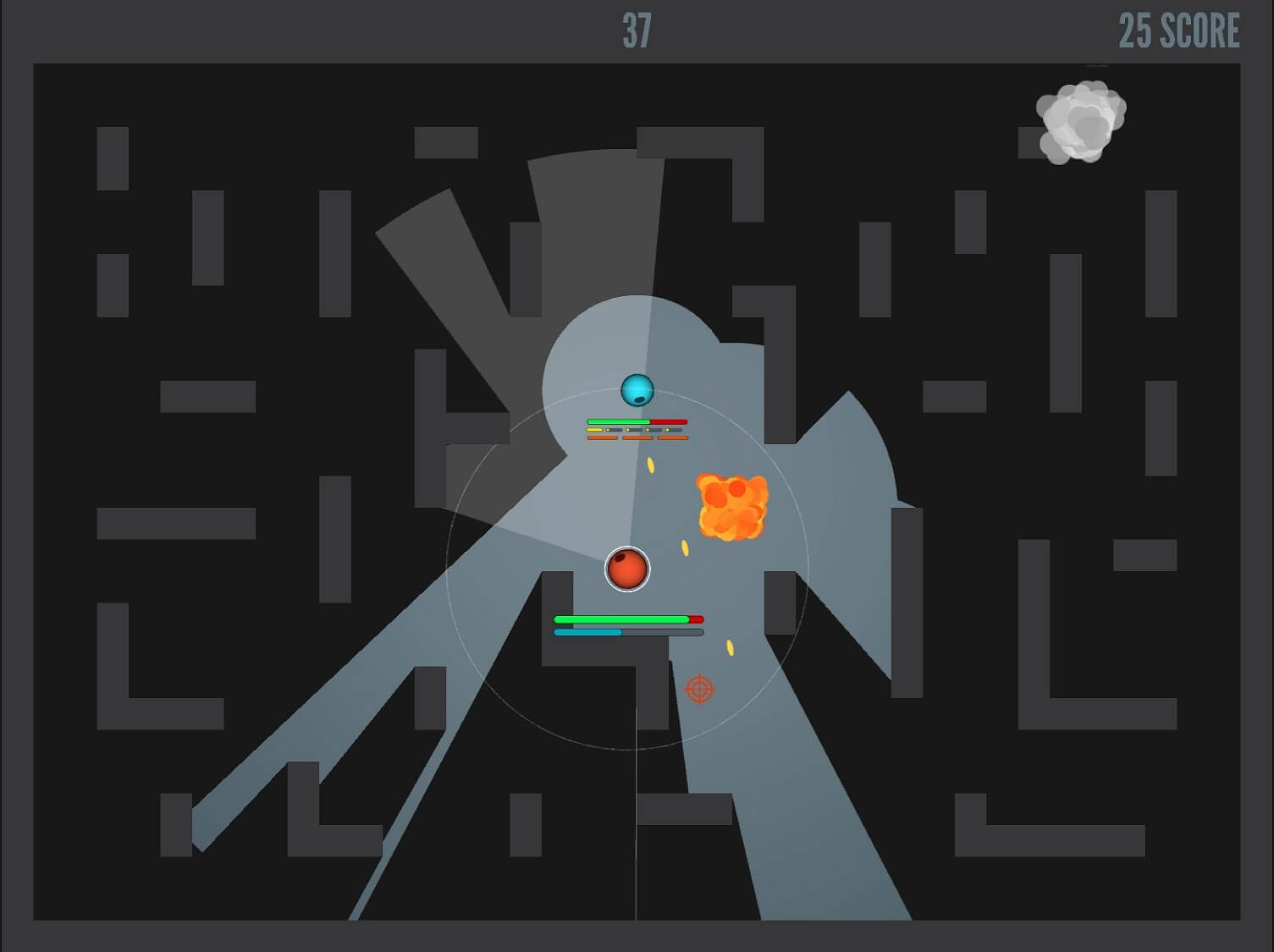

Affectively Framework: Towards Human-like Affect-Based Agents

Matthew Barthet, Roberto Gallotta, Ahmed Khalifa, Antonios Liapis, Georgios N. Yannakakis

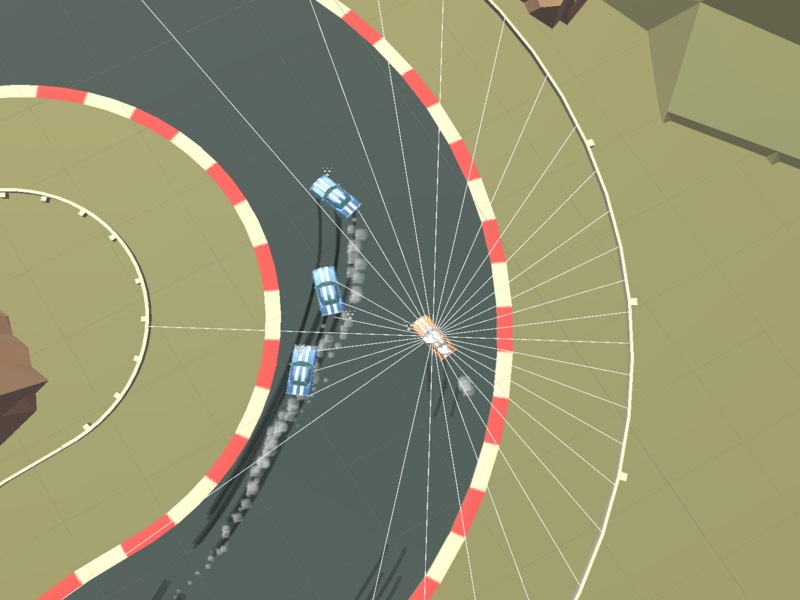

A visualization of the observation state for Solid Rally, one of the three game environments included in the Affectively Framework.

Abstract: Game environments offer a unique opportunity for training virtual agents due to their interactive nature, which provides diverse play traces and affect labels. Despite their potential, no reinforcement learning framework incorporates human affect models as part of its observation space or reward mechanism. To address this, we present the Affectively Framework, a set of OpenAI Gym environments that integrate affect as part of the observation space. This paper introduces the framework and its three game environments and provides baseline experiments to validate its effectiveness and potential.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction Workshops and Demos, 2024.
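
To make the idea of affect as part of the observation space concrete, the sketch below wraps a toy environment so that every observation carries an extra affect value. Everything here is hypothetical: `ToyRacingEnv`, `toy_arousal`, and `AffectObservationWrapper` are illustrative names, the classic four-value `step` return is assumed, and none of it reflects the actual interface of the Affectively Framework.

```python
class ToyRacingEnv:
    """Hypothetical stand-in for a game environment with a Gym-style API."""

    def reset(self):
        self.position = 0
        return [float(self.position)]

    def step(self, action):
        # action is the distance travelled this step (assumed integer).
        self.position += action
        obs = [float(self.position)]
        reward = float(action)
        done = self.position >= 5
        return obs, reward, done, {}


def toy_arousal(obs):
    """Hypothetical affect model: arousal grows with track progress, capped at 1."""
    return min(1.0, obs[0] / 5)


class AffectObservationWrapper:
    """Appends the affect model's prediction to every observation."""

    def __init__(self, env, affect_model):
        self.env = env
        self.affect_model = affect_model

    def reset(self):
        obs = self.env.reset()
        return obs + [self.affect_model(obs)]

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        return obs + [self.affect_model(obs)], reward, done, info
```

An agent trained on the wrapped environment sees the game state and the predicted arousal side by side, so the same affect signal could also be folded into the reward if desired.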

The Scream Stream: Multimodal Affect Analysis of Horror Game Spaces

Emmanouil Xylakis, Antonios Liapis, Georgios N. Yannakakis

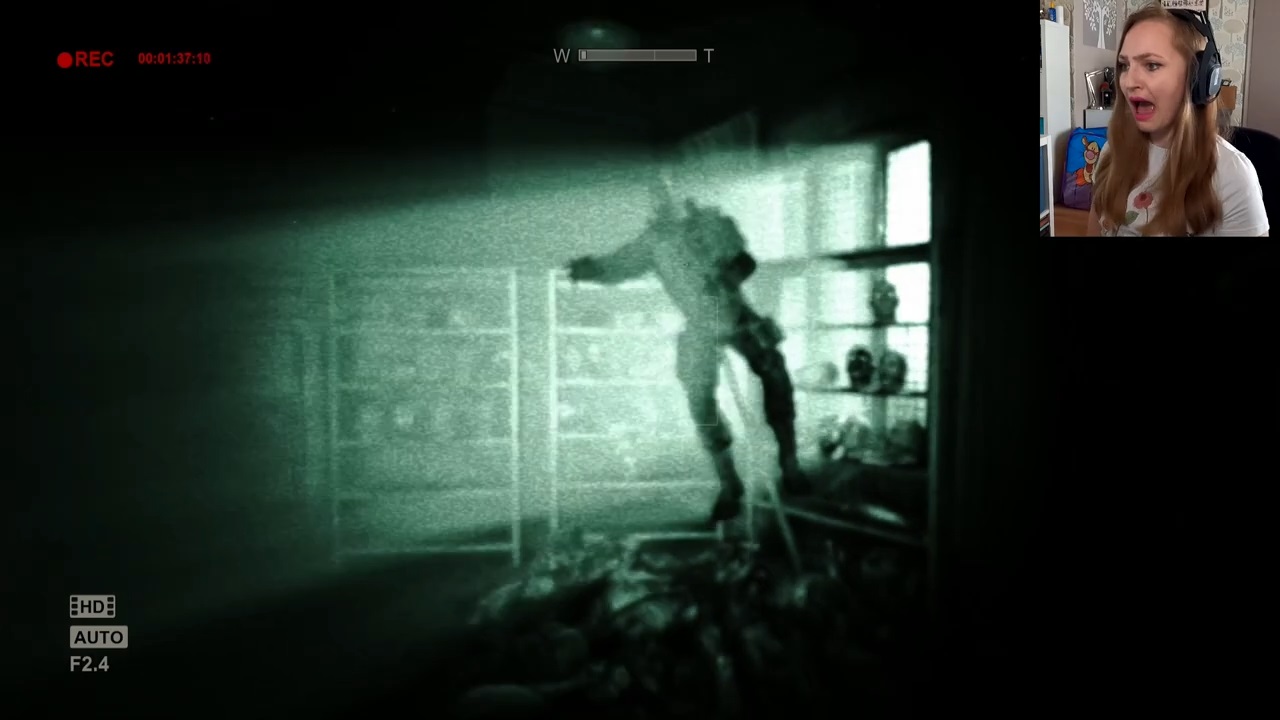

One of the many jump scares of the Outlast Asylum Affect Corpus, depicting in-game visuals and face cam of YouTube user AnidaGaming. Image used with the streamer's permission.

Abstract: Virtual environments allow us to study the impact of space on the emotional patterns of a user as they navigate through it. Similarly, digital games are capable of eliciting intense emotional responses from their players; more so when the game is explicitly designed to do this, as in the Horror game genre. A growing body of literature has already explored the relationship between varying virtual space contexts and user emotion manifestation in horror games, often relying on physiological data or self-reports. In this paper, instead, we study players' emotion manifestations within this game genre. Specifically, we analyse facial expressions, voice signals, and verbal narration of YouTube streamers while playing the Horror game Outlast. We document the collection of the Outlast Asylum Affect corpus from in-the-wild videos, and its analysis into three different affect streams based on the streamer's speech and face camera data. These affect streams are juxtaposed with manually labelled gameplay and spatial transitions during the streamer's exploration of the virtual space of the Asylum map of the Outlast game. Results in terms of linear and non-linear relationships between captured emotions and the labelled features demonstrate the importance of a gameplay context when matching affect to level design parameters. This study is the first to leverage state-of-the-art pre-trained models to derive affect from streamers' facial expressions, voice levels, and utterances and opens up exciting avenues for future applications that treat streamers' affect manifestations as in-the-wild affect corpora.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction Workshops and Demos, 2024.

Varying the Context to Advance Affect Modelling: A Study on Game Engagement Prediction

Kosmas Pinitas, Nemanja Rasajski, Matthew Barthet, Maria Kaselimi, Konstantinos Makantasis, Antonios Liapis, Georgios N. Yannakakis

The GameVibe corpus contains 2 hours of gameplay footage annotated for viewer engagement. The corpus includes footage from 30 First Person Shooter games between 1992 and 2023, with varied game modes and audiovisual styles.

Abstract: Affective computing faces a pressing challenge: the limited ability of affect models to generalise amidst varying contextual factors within the same task. While well recognised, this challenge persists due to the absence of suitable large-scale corpora with rich and diverse contextual information within a domain. To address this challenge, this paper introduces GameVibe, a novel corpus explicitly tailored to confront the lack of contextual diversity. The affect corpus is sourced from 30 First Person Shooter (FPS) games, showcasing diverse game modes and designs within the same domain. The corpus comprises 2 hours of annotated gameplay videos with engagement levels annotated by a total of 20 participants in a time-continuous manner. Our preliminary analysis on this corpus sheds light on the complexity of generalising affect predictions across contextual variations in similar affective computing tasks. These initial findings serve as a catalyst for further research, inspiring deeper inquiries into this critical, yet understudied, aspect of affect modelling.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2024.

Predicting Player Engagement in Tom Clancy's The Division 2: A Multimodal Approach via Pixels and Gamepad Actions

Kosmas Pinitas, David Renaudie, Mike Thomsen, Matthew Barthet, Konstantinos Makantasis, Antonios Liapis, Georgios N. Yannakakis

Data collection snapshot for The Division 2. Annotated videos contain timestamps for data synchronisation, statistics about compute resources, the ID of the workstation, the face of the participant (blurred out), eye-tracking data, and the live input on their gamepad. The bottom of the layout visualises the participant's engagement annotation trace using the PAGAN annotation tool.

Abstract: This paper introduces a large scale multimodal corpus collected for the purpose of analysing and predicting player engagement in commercial-standard games. The corpus is solicited from 25 players of the action role-playing game Tom Clancy's The Division 2, who annotated their level of engagement using a time-continuous annotation tool. The cleaned and processed corpus presented in this paper consists of nearly 20 hours of annotated gameplay videos accompanied by logged gamepad actions. We report preliminary results on predicting long-term player engagement based on in-game footage and game controller actions using Convolutional Neural Network architectures. Results obtained suggest we can predict the player engagement with up to 72% accuracy on average (88% at best) when we fuse information from the game footage and the player's controller input. Our findings validate the hypothesis that long-term (i.e. 1 hour of play) engagement can be predicted efficiently solely from pixels and gamepad actions.

in Proceedings of the 25th ACM International Conference on Multimodal Interaction, 2023.

Knowing Your Annotator: Rapidly Testing the Reliability of Affect Annotation

Matthew Barthet, Chintan Trivedi, Kosmas Pinitas, Emmanouil Xylakis, Konstantinos Makantasis, Antonios Liapis, Georgios N. Yannakakis

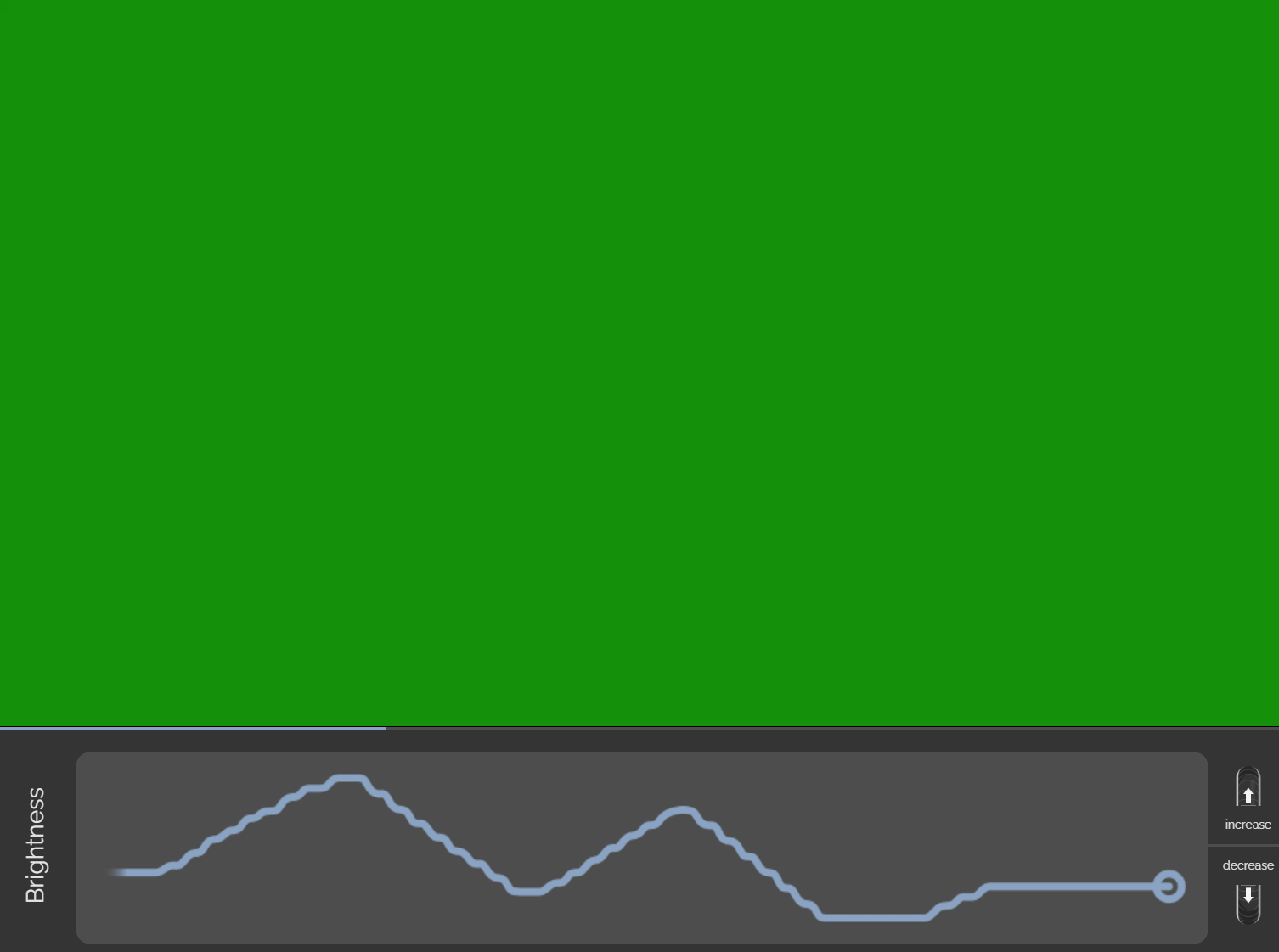

Through a simple Quality Assurance task, we benchmark the annotators' ability to use the RankTrace tool. The shown visual Quality Assurance (QA) task offers a known ground truth (as fluctuation of a green image's brightness in a custom-made video) and allows us to match it to the user annotation. These QA tasks allow us to identify problematic annotators and filter them out in affect annotation tasks where the ground truth (player engagement) is unknown and subjective.

Abstract: The laborious and costly nature of affect annotation is a key detrimental factor for obtaining large scale corpora with valid and reliable affect labels. Motivated by the lack of tools that can effectively determine an annotator's reliability, this paper proposes general quality assurance (QA) tests for real-time continuous annotation tasks. Assuming that the annotation tasks rely on stimuli with audiovisual components, such as videos, we propose and evaluate two QA tests: a visual and an auditory QA test. We validate the QA tool across 20 annotators that are asked to go through the test followed by a lengthy task of annotating the engagement of gameplay videos. Our findings suggest that the proposed QA tool reveals, unsurprisingly, that trained annotators are more reliable than the best of untrained crowdworkers we could employ. Importantly, the QA tool introduced can predict effectively the reliability of an affect annotator with 80% accuracy, thereby, saving on resources, effort and cost, and maximizing the reliability of labels solicited in affective corpora. The introduced QA tool is available and accessible through the PAGAN annotation platform.

in Proceedings of the 11th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), 2023.
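
The QA task described above matches an annotation trace to a known ground-truth signal. One simple way to score such a match is the Pearson correlation between the two signals, sketched below. Using correlation as the score, the `min_corr` cut-off, and the function names are all assumptions for illustration; the paper's own reliability measure may differ.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        # A flat signal carries no information about the ground truth.
        return 0.0
    return cov / (spread_x * spread_y)


def passes_qa(ground_truth, annotation, min_corr=0.5):
    """Return True if the annotation tracks the known signal closely enough.

    min_corr is an assumed cut-off, not a value taken from the paper.
    """
    return pearson(ground_truth, annotation) >= min_corr
```

An annotator who follows the brightness changes at any scale passes, while one who leaves the trace flat or moves against the signal is filtered out before the subjective annotation task.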

Eliciting and Annotating Emotion in Virtual Spaces

Emmanouil Xylakis, Ali Najm, Despina Michael-Grigoriou, Antonios Liapis and Georgios N. Yannakakis

Snapshots of different participants testing the elicitation/annotation protocol in VR using a head-mounted display (left) or on the desktop using a traditional screen. In both cases, the annotation of pleasure was done through a computer mouse.

Abstract: We propose an online methodology where moment-to-moment affect annotations are gathered while exploring and visually interacting with virtual environments. For this task we developed an application to support this methodology, targeting both a VR and a desktop experience, and conducted a study to evaluate these two media of display. Results show that in terms of usability, both experiences were perceived equally positive. Presence was rated significantly higher for the VR experience, while participant ratings indicated a tendency for medium distraction during the annotation process. Additionally, effects between the architectural design elements were identified with perceived pleasure. The strengths and limitations of the proposed approach are highlighted to ground further work in gathering affect data in immersive and interactive media within the context of architectural appraisal.

in Proceedings of the 41st Education and Research in Computer Aided Architectural Design in Europe (eCAADe) Conference, 2023.

From the Lab to the Wild: Affect Modeling via Privileged Information

Konstantinos Makantasis, Kosmas Pinitas, Antonios Liapis and Georgios N. Yannakakis

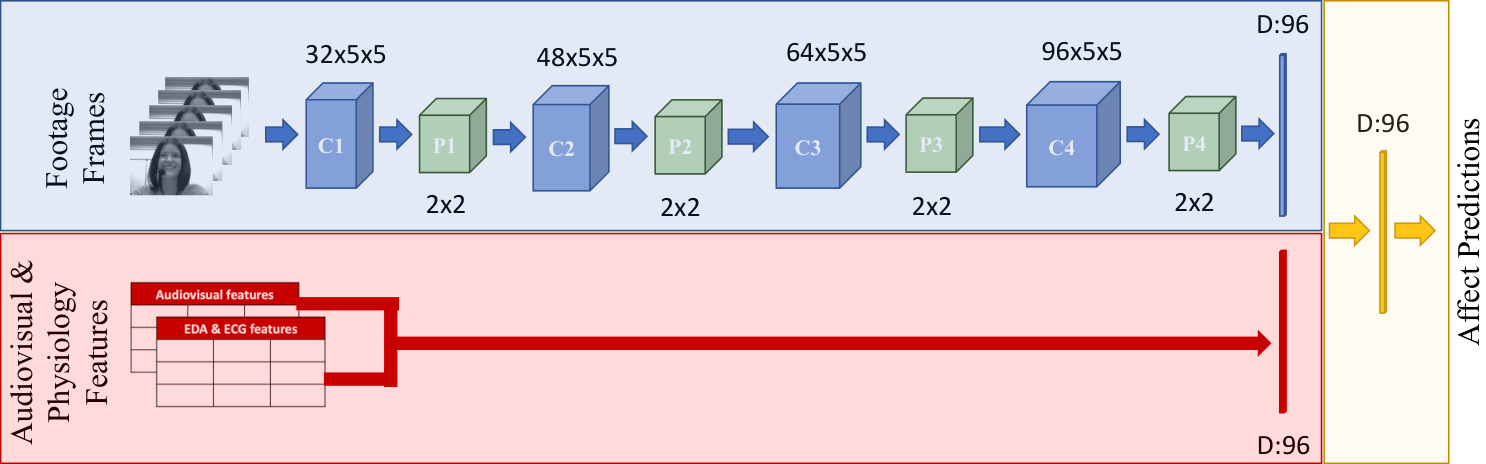

Architecture of the employed models of affect. The blue-shaded stream is based on webcam footage encoded into frames, and constitutes non-privileged information that may be available in the wild. The red stream and yellow-shaded module fuse information from other modalities (e.g. physiology, processed audio metrics) that we treat as privileged; these modalities may not be available or may require specialized software to produce in the wild. The transfer of knowledge in this paper is achieved by feeding the model only those modalities of information that are available in the wild and forcing it, during training, to balance between the learning task's loss and learning latent representations that match those of the teacher model, which is trained on privileged information.

Abstract: How can we reliably transfer affect models trained in controlled laboratory conditions (in-vitro) to uncontrolled real-world settings (in-vivo)? The information gap between in-vitro and in-vivo applications defines a core challenge of affective computing. This gap is caused by limitations related to affect sensing including intrusiveness, hardware malfunctions and availability of sensors. As a response to these limitations, we introduce the concept of privileged information for operating affect models in real-world scenarios (in the wild). Privileged information enables affect models to be trained across multiple modalities available in a lab, and ignore, without significant performance drops, those modalities that are not available when they operate in the wild. Our approach is tested in two multimodal affect databases one of which is designed for testing models of affect in the wild. By training our affect models using all modalities and then using solely raw footage frames for testing the models, we reach the performance of models that fuse all available modalities for both training and testing. The results are robust across both classification and regression affect modeling tasks which are dominant paradigms in affective computing. Our findings make a decisive step towards realizing affect interaction in the wild.

in IEEE Transactions on Affective Computing 15(2), 2024.
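
The caption above describes a student model that balances its task loss against matching the teacher's latent representation. A minimal sketch of such a combined objective follows. The mean squared error as the matching term, the linear weighting through `alpha`, and the function names are assumptions for illustration and are not taken from the paper.

```python
def mse(a, b):
    """Mean squared error between two equally long vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def student_loss(task_loss, student_latent, teacher_latent, alpha=0.5):
    """Blend the task loss with a latent-matching penalty.

    task_loss      -- loss of the student on the affect prediction task
    student_latent -- latent vector from the student (non-privileged input)
    teacher_latent -- latent vector from the teacher (privileged input)
    alpha          -- assumed weight in [0, 1]; 0 ignores the teacher entirely
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * task_loss + alpha * mse(student_latent, teacher_latent)
```

At test time only the student runs, so the privileged modalities are needed solely to produce `teacher_latent` during training.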

Multiplayer Tension In the Wild: A Hearthstone Case

Paris Mavromoustakos-Blom, David Melhart, Antonios Liapis, Georgios N. Yannakakis, Sander Bakkes and Pieter Spronck

Snapshot of a video recorded during a Hearthstone competition, while it is being annotated in terms of the player's tension. In the recording, the webcam feed is captured at the bottom left and the game screen at the top right of the video file.

Abstract: Games are designed to elicit strong emotions during game play, especially when players are competing against each other. Artificial Intelligence applied to predict a player's emotions has mainly been tested on single-player experiences in low-stakes settings and short-term interactions. How do players experience and manifest affect in high-stakes competitions, and which modalities can capture this? This paper reports a first experiment in this line of research, using a competition of the video game Hearthstone where both competing players' game play and facial expressions were recorded over the course of the entire match which could span up to 41 minutes. Using two experts' annotations of tension using a continuous video affect annotation tool, we attempt to predict tension from the webcam footage of the players alone. Treating both the input and the tension output in a relative fashion, our best models reach 66.3% average accuracy (up to 79.2% at the best fold) in the challenging leave-one-participant-out cross-validation task. This initial experiment shows a way forward for affect annotation in games "in the wild" in high-stakes, real-world competitive settings.

in Proceedings of the International Conference on the Foundations of Digital Games, 2023.
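
The leave-one-participant-out scheme mentioned in the abstract holds out all samples of one participant per fold, so the model is always tested on someone it has never seen. A minimal sketch of the fold construction is given below; the function name and the toy identifiers are hypothetical.

```python
def leave_one_participant_out(participant_ids):
    """Yield (held_out, train_indices, test_indices) for each participant.

    participant_ids -- one identifier per sample, in dataset order
    """
    for held_out in sorted(set(participant_ids)):
        train = [i for i, p in enumerate(participant_ids) if p != held_out]
        test = [i for i, p in enumerate(participant_ids) if p == held_out]
        yield held_out, train, test


if __name__ == "__main__":
    sample_owners = ["p1", "p1", "p2", "p3", "p3"]
    for held_out, train, test in leave_one_participant_out(sample_owners):
        print(held_out, "train:", train, "test:", test)
```

Average accuracy is then the mean over folds, and the best-fold figure is the maximum, which is how the two numbers reported in the abstract relate to each other.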

The Pixels and Sounds of Emotion: General-Purpose Representations of Arousal in Games

Konstantinos Makantasis, Antonios Liapis and Georgios N. Yannakakis

Screenshots of the four tested games, and the activation map of the classifier trained to predict high or low arousal. Warmer colors in the activation maps show which areas of the screen most impact the prediction of high arousal.

Abstract: What if emotion could be captured in a general and subject-agnostic fashion? Is it possible, for instance, to design general-purpose representations that detect affect solely from the pixels and audio of a human-computer interaction video? In this paper we address the above questions by evaluating the capacity of deep learned representations to predict affect by relying only on audiovisual information of videos. We assume that the pixels and audio of an interactive session embed the necessary information required to detect affect. We test our hypothesis in the domain of digital games and evaluate the degree to which deep classifiers and deep preference learning algorithms can learn to predict the arousal of players based only on the video footage of their gameplay. Our results from four dissimilar games suggest that general-purpose representations can be built across games as the arousal models obtain average accuracies as high as 85% using the challenging leave-one-video-out cross-validation scheme. The dissimilar audiovisual characteristics of the tested games showcase the strengths and limitations of the proposed method.

in IEEE Transactions on Affective Computing 14(1), 2023.

The Invariant Ground Truth of Affect

Konstantinos Makantasis, Kosmas Pinitas, Antonios Liapis and Georgios N. Yannakakis

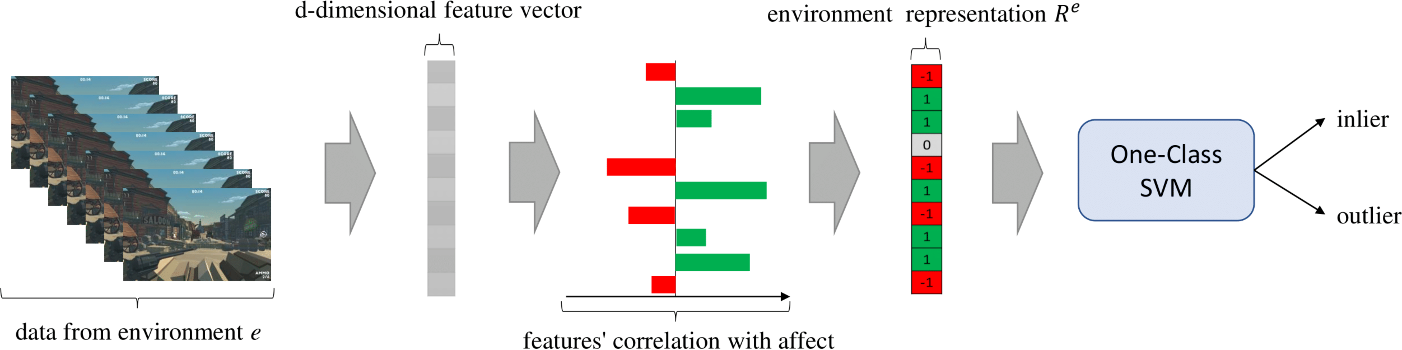

The proposed overall methodology for detecting outlier environments based on correlation coefficients and One-Class SVMs.

Abstract: Affective computing strives to unveil the unknown relationship between affect elicitation, manifestation of affect and affect annotations. The ground truth of affect, however, is predominately attributed to the affect labels which inadvertently include biases inherent to the subjective nature of emotion and its labeling. The response to such limitations is usually augmenting the dataset with more annotations per data point; however, this is not possible when we are interested in self-reports via first-person annotation. Moreover, outlier detection methods based on inter-annotator agreement only consider the annotations themselves and ignore the context and the corresponding affect manifestation. This paper reframes the ways one may obtain a reliable ground truth of affect by transferring aspects of causation theory to affective computing. In particular, we assume that the ground truth of affect can be found in the causal relationships between elicitation, manifestation and annotation that remain invariant across tasks and participants. To test our assumption we employ causation inspired methods for detecting outliers in affective corpora and building affect models that are robust across participants and tasks. We validate our methodology within the domain of digital games, with experimental results showing that it can successfully detect outliers and boost the accuracy of affect models. To the best of our knowledge, this study presents the first attempt to integrate causation tools in affective computing, making a crucial and decisive step towards general affect modeling.

in Proceedings of the ACII Workshop on What's Next in Affect Modeling?, 2022.

Supervised Contrastive Learning for Affect Modelling

Kosmas Pinitas, Konstantinos Makantasis, Antonios Liapis and Georgios N. Yannakakis

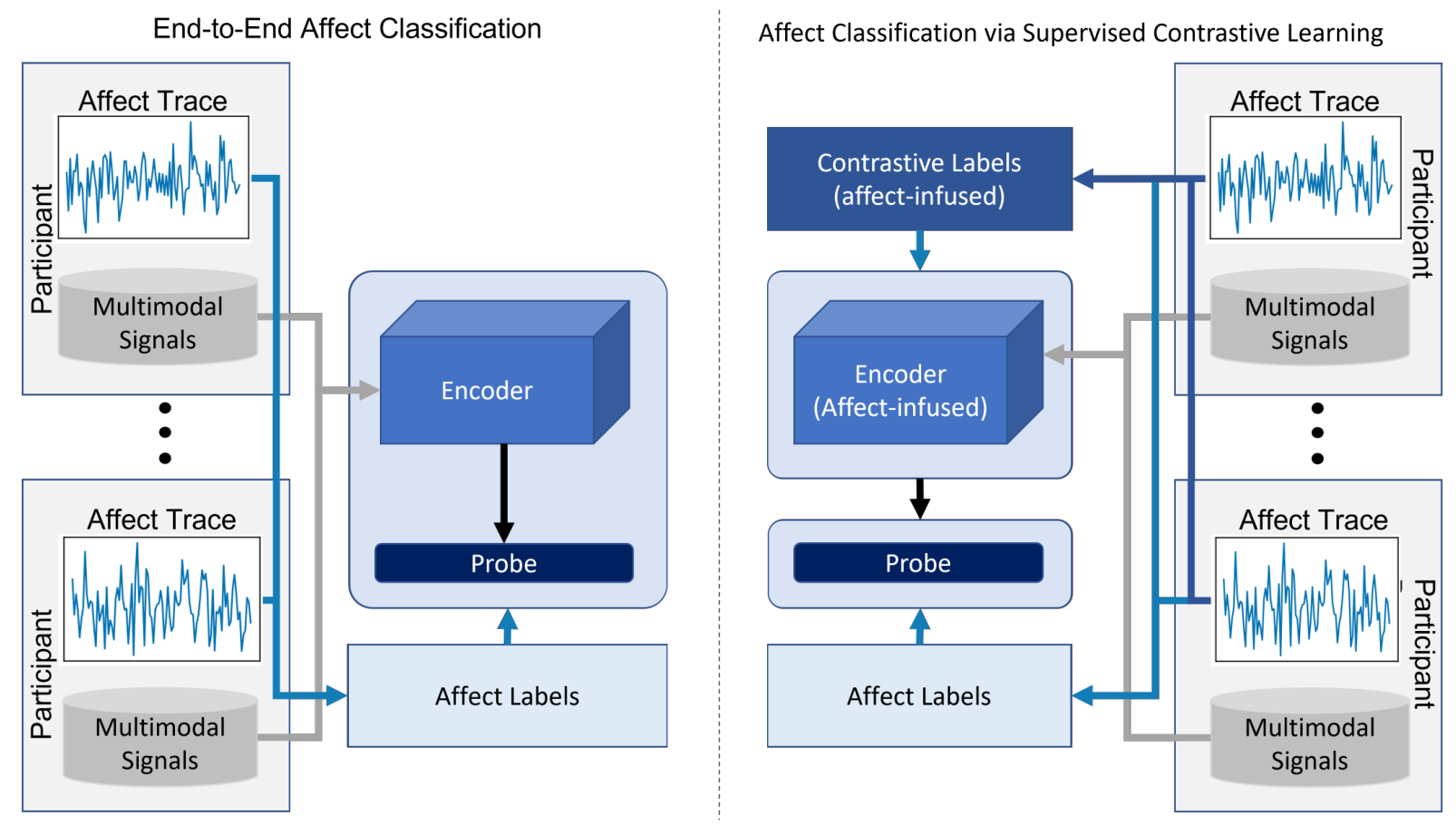

Traditional end-to-end classification baseline (left) compared to the supervised contrastive learning method (right) which first derives affect-infused labels for contrastive learning, and then trains the probe model based on the affect representations of the pre-trained encoder. In both learning paradigms, affect labels are derived from participants' annotations.

Abstract: Affect modeling is viewed, traditionally, as the process of mapping measurable affect manifestations from multiple modalities of user input to affect labels. That mapping is usually inferred through end-to-end (manifestation-to-affect) machine learning processes. What if, instead, one trains general, subject-invariant representations that consider affect information and then uses such representations to model affect? In this paper we assume that affect labels form an integral part, and not just the training signal, of an affect representation and we explore how the recent paradigm of contrastive learning can be employed to discover general high-level affect-infused representations for the purpose of modeling affect. We introduce three different supervised contrastive learning approaches for training representations that consider affect information. In this initial study we test the proposed methods for arousal prediction in the RECOLA dataset based on user information from multiple modalities. Results demonstrate the representation capacity of contrastive learning and its efficiency in boosting the accuracy of affect models. Beyond their evidenced higher performance compared to end-to-end arousal classification, the resulting representations are general-purpose and subject-agnostic, as training is guided through general affect information available in any multimodal corpus.

in Proceedings of the International Conference on Multimodal Interaction, 2022.
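
For readers unfamiliar with supervised contrastive learning, the sketch below implements a generic supervised contrastive loss in plain Python: embeddings that share an affect label are pulled together and all others are pushed apart. It is a textbook-style formulation with an assumed `temperature`, not one of the three approaches introduced in the paper, and it assumes non-zero embedding vectors.

```python
from math import exp, log, sqrt


def normalise(vector):
    """Scale a non-zero vector to unit length."""
    norm = sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def supcon_loss(embeddings, labels, temperature=0.1):
    """Generic supervised contrastive loss, averaged over valid anchors.

    Anchors without any other sample of the same label are skipped.
    """
    z = [normalise(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue
        # Temperature-scaled cosine similarity to every other sample.
        sims = {
            a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
            for a in range(n)
            if a != i
        }
        log_denominator = log(sum(exp(s) for s in sims.values()))
        # Mean negative log-probability of picking each positive.
        total += -sum(sims[p] - log_denominator for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0
```

The loss is low when samples with the same label (for example "high arousal") already sit close together in the embedding space, and high when the labels cut across the geometry.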

Play with Emotion: Affect-Driven Reinforcement Learning

Matthew Barthet, Ahmed Khalifa, Antonios Liapis and Georgios N. Yannakakis

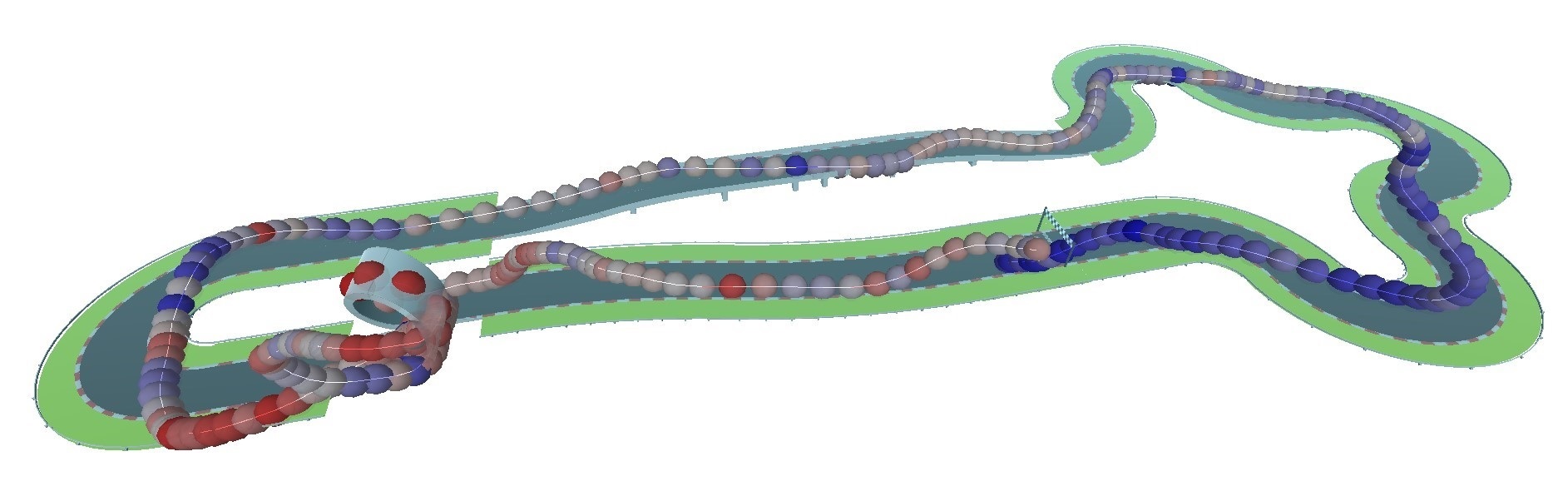

The best playthroughs of a trained agent to maximize affect confidence. Spheres indicate the player's position during the first lap, with red and blue spheres indicating high and low predicted arousal respectively.

Abstract: This paper introduces a paradigm shift by viewing the task of affect modeling as a reinforcement learning (RL) process. According to the proposed paradigm, RL agents learn a policy (i.e. affective interaction) by attempting to maximize a set of rewards (i.e. behavioral and affective patterns) via their experience with their environment (i.e. context). Our hypothesis is that RL is an effective paradigm for interweaving affect elicitation and manifestation with behavioral and affective demonstrations. Importantly, our second hypothesis - building on Damasio's somatic marker hypothesis - is that emotion can be the facilitator of decision-making. We test our hypotheses in a racing game by training Go-Blend agents to model human demonstrations of arousal and behavior; Go-Blend is a modified version of the Go-Explore algorithm which has recently showcased supreme performance in hard exploration tasks. We first vary the arousal-based reward function and observe agents that can effectively display a palette of affect and behavioral patterns according to the specified reward. Then we use arousal-based state selection mechanisms in order to bias the strategies that Go-Blend explores. Our findings suggest that Go-Blend not only is an efficient affect modeling paradigm but, more importantly, affect-driven RL improves exploration and yields higher performing agents, validating Damasio's hypothesis in the domain of games.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2022.

Generative Personas That Behave and Experience Like Humans

Matthew Barthet, Ahmed Khalifa, Antonios Liapis and Georgios N. Yannakakis

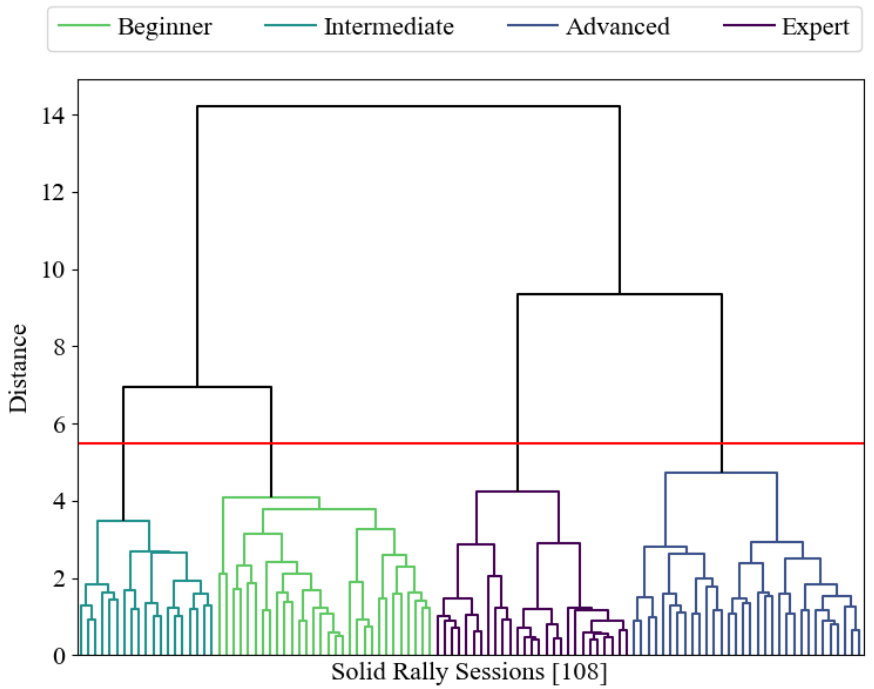

Clustering of aggregated session data, revealing 4 clusters of players with different in-game performance characteristics.

Abstract: Using artificial intelligence (AI) to automatically test a game remains a critical challenge for the development of richer and more complex game worlds and for the advancement of AI at large. One of the most promising methods for achieving that long-standing goal is the use of generative AI agents, namely procedural personas, that attempt to imitate particular playing behaviors which are represented as rules, rewards, or human demonstrations. All research efforts for building those generative agents, however, have focused solely on playing behavior which is arguably a narrow perspective of what a player actually does in a game. Motivated by this gap in the existing state of the art, in this paper we extend the notion of behavioral procedural personas to cater for player experience, thus examining generative agents that can both behave and experience their game as humans would. For that purpose, we employ the Go-Explore reinforcement learning paradigm for training human-like procedural personas, and we test our method on behavior and experience demonstrations of more than 100 players of a racing game. Our findings suggest that the generated agents exhibit distinctive play styles and experience responses of the human personas they were designed to imitate. Importantly, it also appears that experience, which is tied to playing behavior, can be a highly informative driver for better behavioral exploration.

in Proceedings of the Foundations of Digital Games Conference, 2022.

The Arousal video Game AnnotatIoN (AGAIN) Dataset

David Melhart, Antonios Liapis and Georgios N. Yannakakis

The nine games played and annotated as part of the AGAIN dataset. Top row shows the three racing games (TinyCars, Solid Rally, Apex Speed), mid row shows the three shooter games (Heist!, TopDown, Shootout), and bottom row shows the three platformer games (Endless, Pirates!, Run'n'gun).

Abstract: How can we model affect in a general fashion, across dissimilar tasks, and to which degree are such general representations of affect even possible? To address such questions and enable research towards general affective computing, this paper introduces The Arousal video Game AnnotatIoN (AGAIN) dataset. AGAIN is a large-scale affective corpus that features over 1,100 in-game videos (with corresponding gameplay data) from nine different games, which are annotated for arousal from 124 participants in a first-person continuous fashion. Even though AGAIN is created for the purpose of investigating the generality of affective computing across dissimilar tasks, affect modelling can be studied within each of its 9 specific interactive games. To the best of our knowledge AGAIN is the largest - over 37 hours of annotated video and game logs - and most diverse publicly available affective dataset based on games as interactive affect elicitors.

in IEEE Transactions on Affective Computing 13(4), 2022.

RankNEAT: Outperforming Stochastic Gradient Search in Preference Learning Tasks

Kosmas Pinitas, Konstantinos Makantasis, Antonios Liapis and Georgios N. Yannakakis

Eigen-CAM visualization of game footage features that impact player arousal predictions in a platformer game.

Abstract: Stochastic gradient descent (SGD) is a premium optimization method for training neural networks, especially for learning objectively defined labels such as image objects and events. When a neural network is instead faced with subjectively defined labels - such as human demonstrations or annotations - SGD may struggle to explore the deceptive and noisy loss landscapes caused by the inherent bias and subjectivity of humans. While neural networks are often trained via preference learning algorithms in an effort to eliminate such data noise, the de facto training methods rely on gradient descent. Motivated by the lack of empirical studies on the impact of evolutionary search to the training of preference learners, we introduce the RankNEAT algorithm which learns to rank through neuroevolution of augmenting topologies. We test the hypothesis that RankNEAT outperforms traditional gradient-based preference learning within the affective computing domain, in particular predicting annotated player arousal from the game footage of three dissimilar games. RankNEAT yields superior performances compared to the gradient-based preference learner (RankNet) in the majority of experiments since its architecture optimization capacity acts as an efficient feature selection mechanism, thereby, eliminating overfitting. Results suggest that RankNEAT is a viable and highly efficient evolutionary alternative to preference learning.

in Proceedings of the Genetic and Evolutionary Computation Conference, 2022.
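
The abstract contrasts RankNEAT with the gradient-based RankNet preference learner. As background, the sketch below shows the standard pairwise logistic loss that RankNet-style learners minimise, together with a pairwise accuracy measure. The function names and the toy data layout are assumptions; the sketch does not reproduce RankNEAT's evolutionary search or its fitness function.

```python
from math import exp, log1p


def pairwise_logistic_loss(score_preferred, score_other):
    """Loss for one preference pair: -log(sigmoid(preferred - other)).

    Written in a numerically stable softplus form.
    """
    diff = score_preferred - score_other
    return max(-diff, 0.0) + log1p(exp(-abs(diff)))


def pairwise_accuracy(scores, preferences):
    """Fraction of preference pairs that the scores order correctly.

    scores      -- dict mapping item id to the model's predicted score
    preferences -- list of (preferred_id, other_id) pairs
    """
    correct = sum(1 for a, b in preferences if scores[a] > scores[b])
    return correct / len(preferences)
```

The loss depends only on score differences, which is why preference learners cope with annotations that are reliable in their ordering but not in their absolute values.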

AffectGAN: Affect-Based Generative Art Driven by Semantics

Theodoros Galanos, Antonios Liapis and Georgios N. Yannakakis

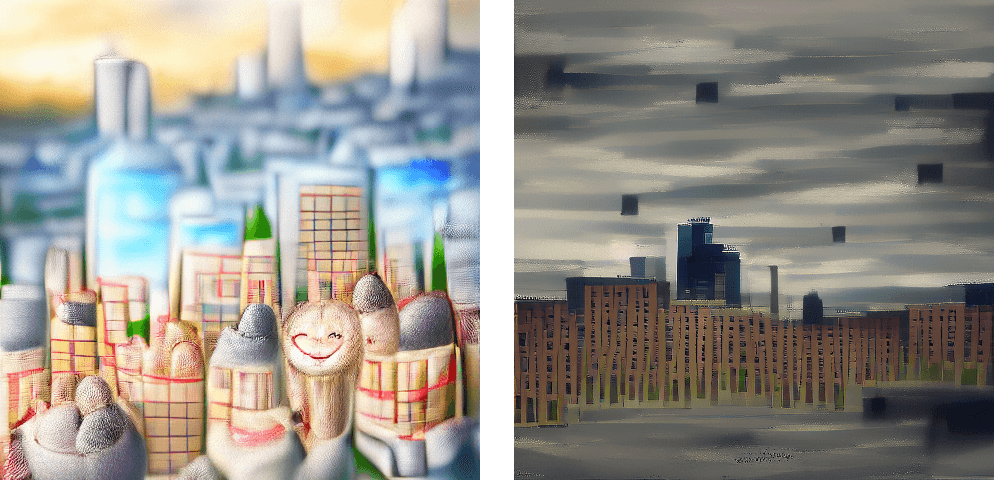

Two images generated via AffectGAN for a cityscape prompt: the left image is generated for "a happy cityscape" and the right image is generated for "a depressed cityscape".

Abstract: This paper introduces a novel method for generating artistic images that express particular affective states. Leveraging state-of-the-art deep learning methods for visual generation (through generative adversarial networks), semantic models from OpenAI, and the annotated dataset of the visual art encyclopedia WikiArt, our AffectGAN model is able to generate images based on specific or broad semantic prompts and intended affective outcomes. A small dataset of 32 images generated by AffectGAN is annotated by 50 participants in terms of the particular emotion they elicit, as well as their quality and novelty. Results show that for most instances the intended emotion used as a prompt for image generation matches the participants' responses. This small-scale study brings forth a new vision towards blending affective computing with computational creativity, enabling generative systems with intentionality in terms of the emotions they wish their output to elicit.

in Proceedings of the ACII Workshop on What's Next in Affect Modeling?, 2021.

Go-Blend Behavior and Affect

Matthew Barthet, Antonios Liapis and Georgios N. Yannakakis

The simple platformer game "Endless Runner" is used as a test case for an agent controller that combines behavioral rewards (playing to win) with affect rewards (playing to experience) based on human users' annotations of arousal during their own playthroughs.

Abstract: This paper proposes a paradigm shift for affective computing by viewing the affect modeling task as a reinforcement learning process. According to our proposed framework the context (environment) and the actions of an agent define the common representation that interweaves behavior and affect. To realise this framework we build on recent advances in reinforcement learning and use a modified version of the Go-Explore algorithm which has showcased supreme performance in hard exploration tasks. In this initial study, we test our framework in an arcade game by training Go-Explore agents to both play optimally and attempt to mimic human demonstrations of arousal. We vary the degree of importance between optimal play and arousal imitation and create agents that can effectively display a palette of affect and behavioral patterns. Our Go-Explore implementation not only introduces a new paradigm for affect modeling; it empowers believable AI-based game testing by providing agents that can blend and express a multitude of behavioral and affective patterns.

in Proceedings of the ACII Workshop on What's Next in Affect Modeling?, 2021. BibTex

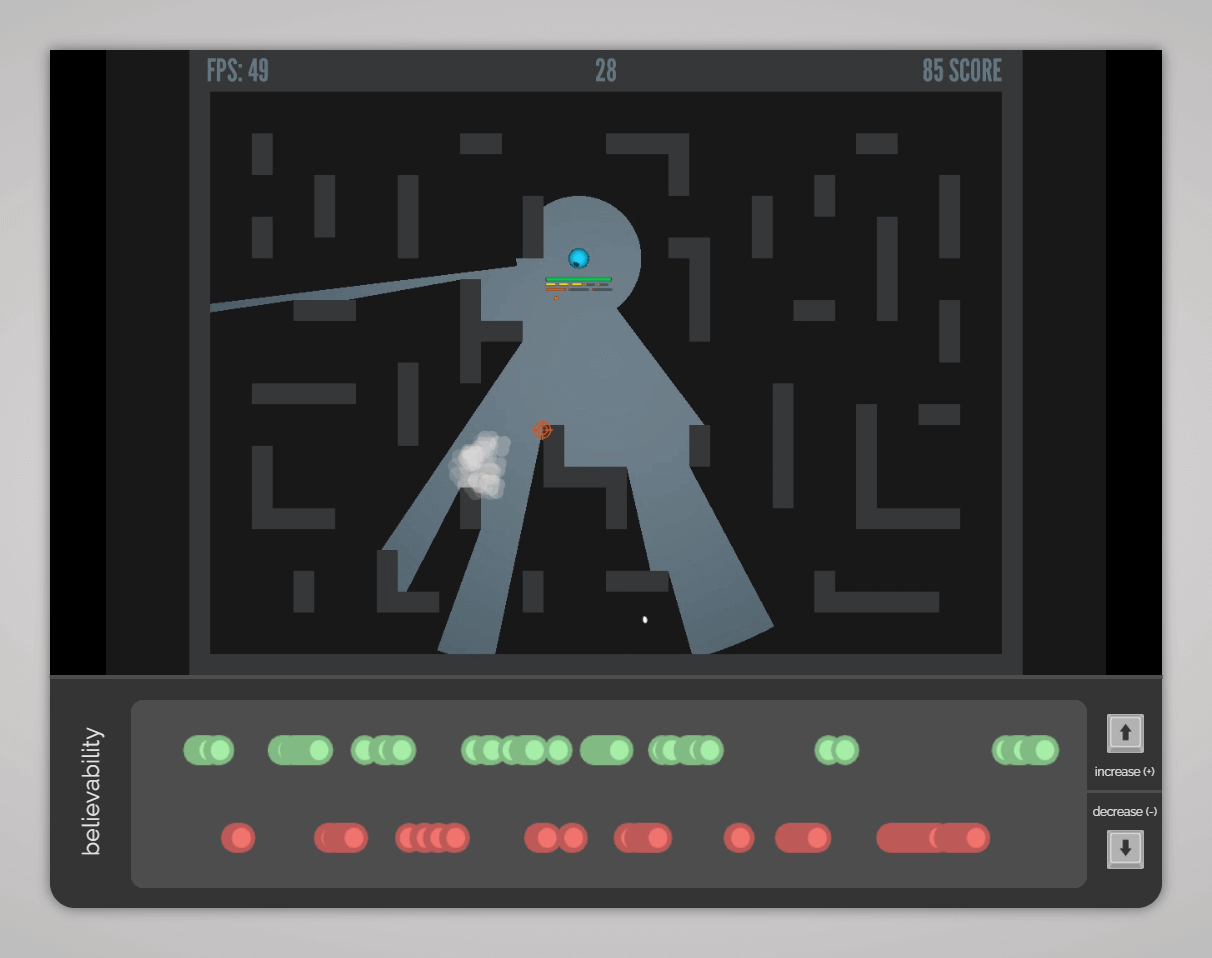

Discrete versus Ordinal Time-Continuous Believability Assessment

Cristiana Pacheco, David Melhart, Antonios Liapis, Georgios N. Yannakakis and Diego Perez-Liebana

Abstract: What is believability? And how do we assess it? These questions remain a challenge in human-computer interaction and games research. When assessing the believability of agents, researchers opt for an overall view of believability reminiscent of the Turing test. Current evaluation approaches have proven to be diverse and, thus, have yet to establish a framework. In this paper, we propose treating believability as a time-continuous phenomenon. We have conducted a study in which participants play a one-versus-one shooter game and annotate the character's believability. They face two different opponents which present different behaviours. In this novel process, these annotations are done moment-to-moment using two different annotation schemes: BTrace and RankTrace. This is followed by the user's believability preference between the two playthroughs, effectively allowing us to compare the two annotation tools and time-continuous assessment with discrete assessment. Results suggest that a binary annotation tool could be more intuitive to use than its continuous counterpart and provides more information on context. We conclude that this method may offer a necessary addition to current assessment techniques.

in Proceedings of the ACII Workshop on Multimodal Analyses enabling Artificial Agents in Human-Machine Interaction (MA3HMI), 2021. BibTex

Privileged Information for Modeling Affect In The Wild

Konstantinos Makantasis, David Melhart, Antonios Liapis and Georgios N. Yannakakis

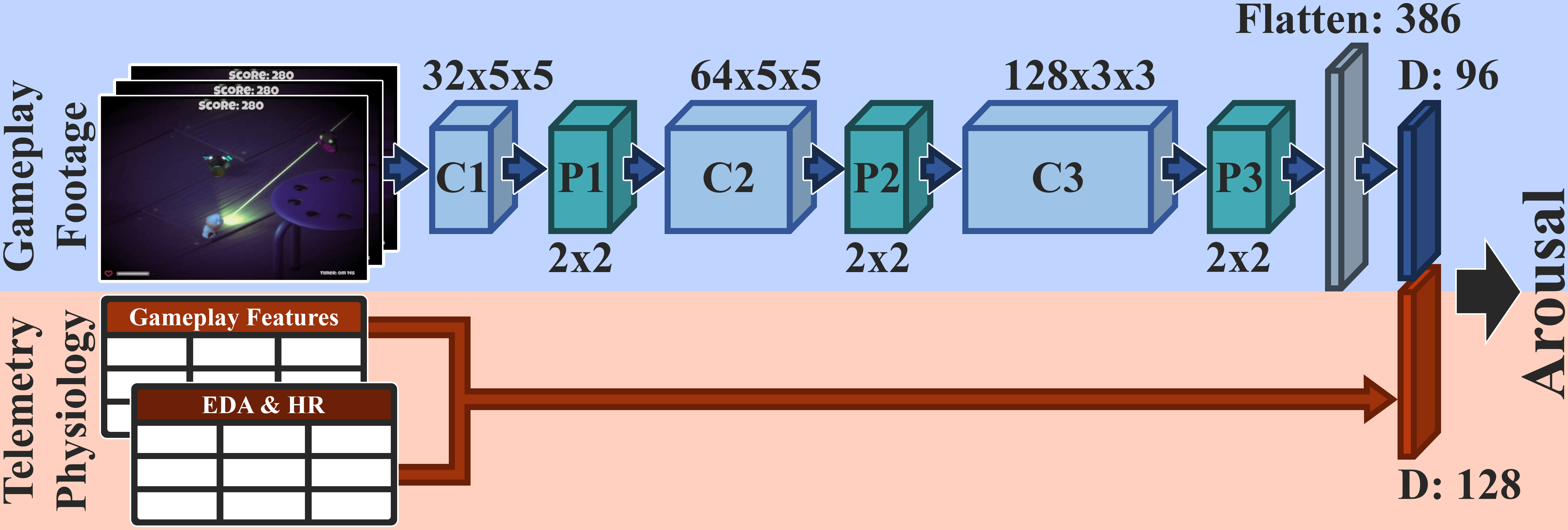

Gameplay footage in the student model is combined with physiological signals and game telemetry in the teacher model. In the wild, the privileged information (biosignal and game telemetry data) is unavailable.

Abstract: A key challenge of affective computing research is discovering ways to reliably transfer affect models that are built in the laboratory to real world settings, namely in the wild. The existing gap between in vitro and in vivo affect applications is mainly caused by limitations related to affect sensing including intrusiveness, hardware malfunctions, availability of sensors, but also privacy and security. As a response to these limitations in this paper we are inspired by recent advances in machine learning and introduce the concept of privileged information for operating affect models in the wild. The presence of privileged information enables affect models to be trained across multiple modalities available in a lab setting and ignore modalities that are not available in the wild with no significant drop in their modeling performance. The proposed privileged information framework is tested in a game arousal corpus that contains physiological signals in the form of heart rate and electrodermal activity, game telemetry, and pixels of footage from two dissimilar games that are annotated with arousal traces. By training our arousal models using all modalities (in vitro) and using solely pixels for testing the models (in vivo), we reach levels of accuracy obtained from models that fuse all modalities both for training and testing. The findings of this paper make a decisive step towards realizing affect interaction in the wild.

in Proceedings of the IEEE International Conference on Affective Computing and Intelligent Interaction, 2021. BibTex

Architectural Form and Affect: A Spatiotemporal Study of Arousal

Emmanouil Xylakis, Antonios Liapis and Georgios N. Yannakakis

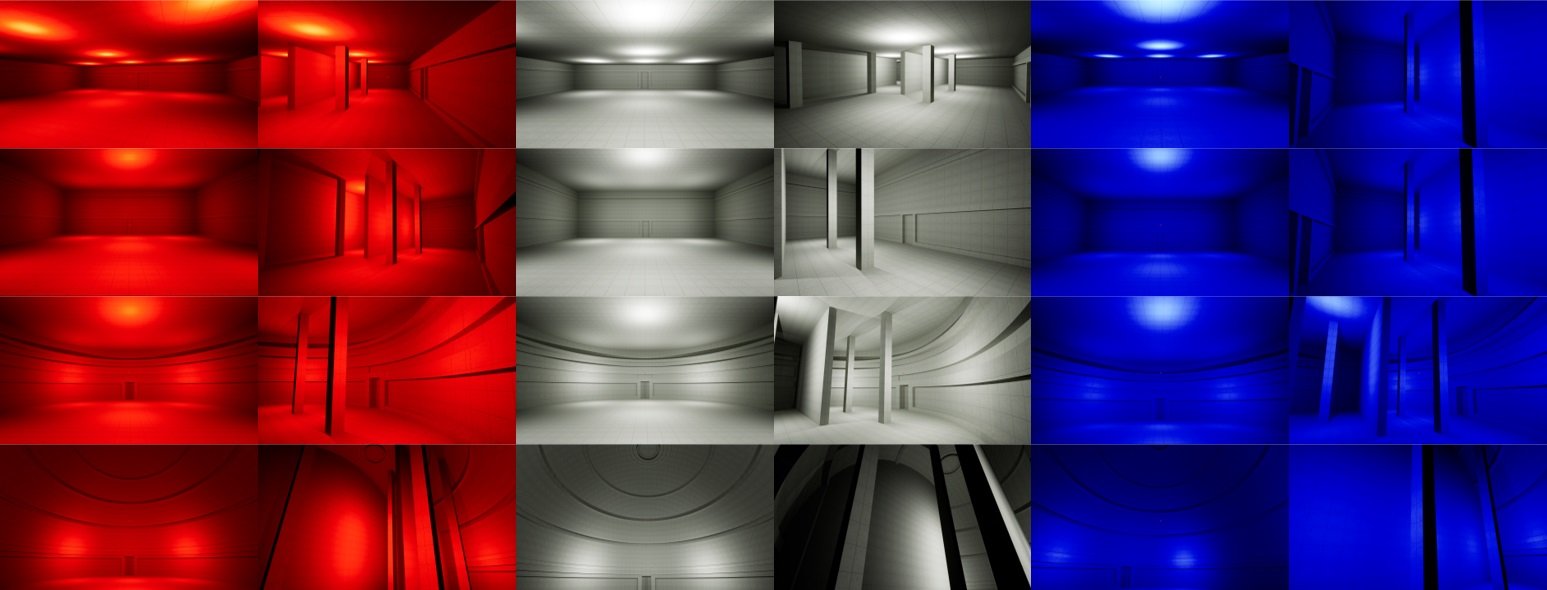

First-person views of the 24 rooms examined in the AffRooms corpus, while a player navigates through them.

Abstract: How does the form of our surroundings impact the ways we feel? This paper extends the body of research on the effects that space and light have on emotion by focusing on critical features of architectural form and illumination colors and their spatiotemporal impact on arousal. For that purpose, we solicited a corpus of spatial transitions in video form, lasting over 60 minutes, annotated by three participants in terms of arousal in a time-continuous and unbounded fashion. We process the annotation traces of that corpus in a relative fashion, focusing on the direction of arousal changes (increasing or decreasing) as affected by changes between consecutive rooms. Results show that properties of the form such as curved or complex spaces align highly with increased arousal. The analysis presented in this paper sheds some initial light on the relationship between arousal and core spatiotemporal features of form that is of particular importance for the affect-driven design of architectural spaces.

in Proceedings of the IEEE International Conference on Affective Computing and Intelligent Interaction, 2021. BibTex

Trace It Like You Believe It: Time-Continuous Believability Prediction

Cristiana Pacheco, David Melhart, Antonios Liapis, Georgios N. Yannakakis and Diego Perez-Liebana

Moment-to-moment believability annotation with discrete binary labels (BTrace) through PAGAN.

Abstract: Assessing the believability of agents, characters and simulated actors is a core challenge for human computer interaction. While numerous approaches are suggested in the literature, they are all limited to discrete and low-granularity representations of believable behavior. In this paper we view believability, for the first time, as a time-continuous phenomenon and we explore the suitability of two different affect annotation schemes for its assessment. In particular, we study the degree to which we can predict character believability in a continuous fashion through a two-player game study. The game features various opponent behaviors that are assessed for their believability by 89 participants that played the game and then annotated their recorded playthrough. Random forest models are then trained to predict believability based on ad-hoc designed in-game features. Results suggest that a discrete annotation method leads to a more robust assessment of the ground truth and subsequently better modelling performance. Our best models are able to predict a change in perceived believability with a 72.5% accuracy on average (up to 90% in the best cases) in a time-continuous manner.

in Proceedings of the IEEE International Conference on Affective Computing and Intelligent Interaction, 2021. BibTex

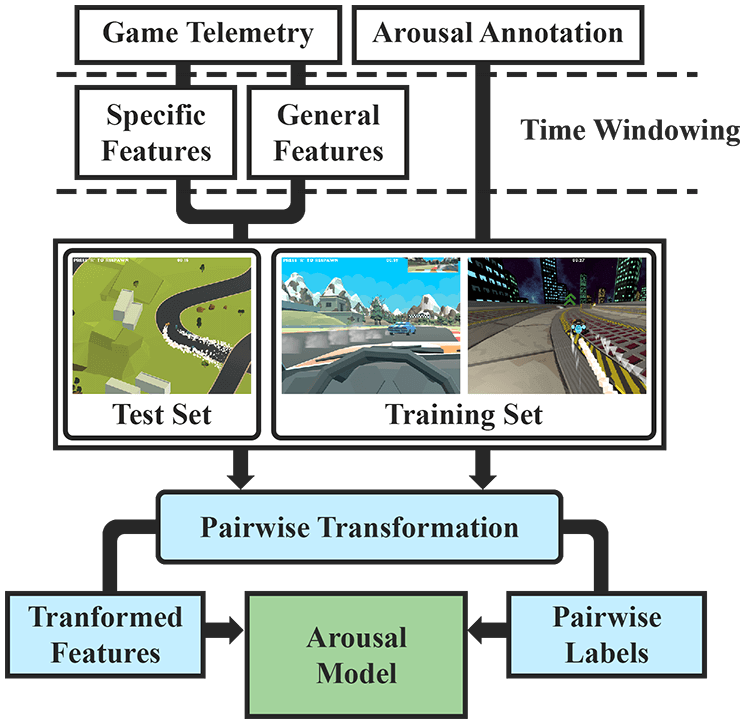

Towards General Models of Player Experience: A Study Within Genres

David Melhart, Antonios Liapis and Georgios N. Yannakakis

Genre-based modelling pipeline for modelling arousal across different games of the same genre.

Abstract: To which degree can abstract gameplay metrics capture the player experience in a general fashion within a game genre? In this comprehensive study we address this question across three different videogame genres: racing, shooter, and platformer games. Using high-level gameplay features that feed preference learning models we are able to predict arousal accurately across different games of the same genre in a large-scale dataset of over 1,000 arousal-annotated play sessions. Our genre models predict changes in arousal with up to 74% accuracy on average across all genres and 86% in the best cases. We also examine the feature importance during the modelling process and find that time-related features largely contribute to the performance of both game and genre models. The prominence of these game-agnostic features shows the importance of the temporal dynamics of the play experience in modelling, but also highlights some of the challenges for the future of general affect modelling in games and beyond.

in Proceedings of the IEEE Conference on Games, 2021. BibTex

I Feel I Feel You: A Theory of Mind Experiment in Games

David Melhart, Georgios N. Yannakakis and Antonios Liapis

The MAZING game was used as a testbed, with an artificial agent exhibiting frustrated behavior based on the player's actions or their own.

Abstract: In this study into the player's emotional theory of mind of gameplaying agents, we investigate how an agent's behaviour and the player's own performance and emotions shape the recognition of a frustrated behaviour. We focus on the perception of frustration as it is a prevalent affective experience in human-computer interaction. We present a testbed game tailored towards this end, in which a player competes against an agent with a frustration model based on theory. We collect gameplay data, an annotated ground truth about the player's appraisal of the agent's frustration, and apply face recognition to estimate the player's emotional state. We examine the collected data through correlation analysis and predictive machine learning models, and find that the player's observable emotions are not correlated highly with the perceived frustration of the agent. This suggests that our subject's theory of mind is a cognitive process based on the gameplay context. Our predictive models - using ranking support vector machines - corroborate these results, yielding moderately accurate predictors of players' theory of mind.

in Künstliche Intelligenz, vol. 34, pp. 45–55. Springer, 2020. BibTex

From Pixels to Affect: A Study on Games and Player Experience

Konstantinos Makantasis, Antonios Liapis and Georgios N. Yannakakis

GRAD-CAM visualization, showing the areas of the game screen which correspond to a high activation of the model of players' arousal level.

Abstract: Is it possible to predict the affect of a user just by observing her behavioral interaction through a video? How can we, for instance, predict a user's arousal in games by merely looking at the screen during play? In this paper we address these questions by employing three dissimilar deep convolutional neural network architectures in our attempt to learn the underlying mapping between video streams of gameplay and the player's arousal. We test the algorithms in an annotated dataset of 50 gameplay videos of a survival shooter game and evaluate the deep learned models' capacity to classify high vs low arousal levels. Our key findings with the demanding leave-one-video-out validation method reveal accuracies of over 78% on average and 98% at best. While this study focuses on games and player experience as a test domain, the findings and methodology are directly relevant to any affective computing area, introducing a general and user-agnostic approach for modeling affect.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2019. BibTex

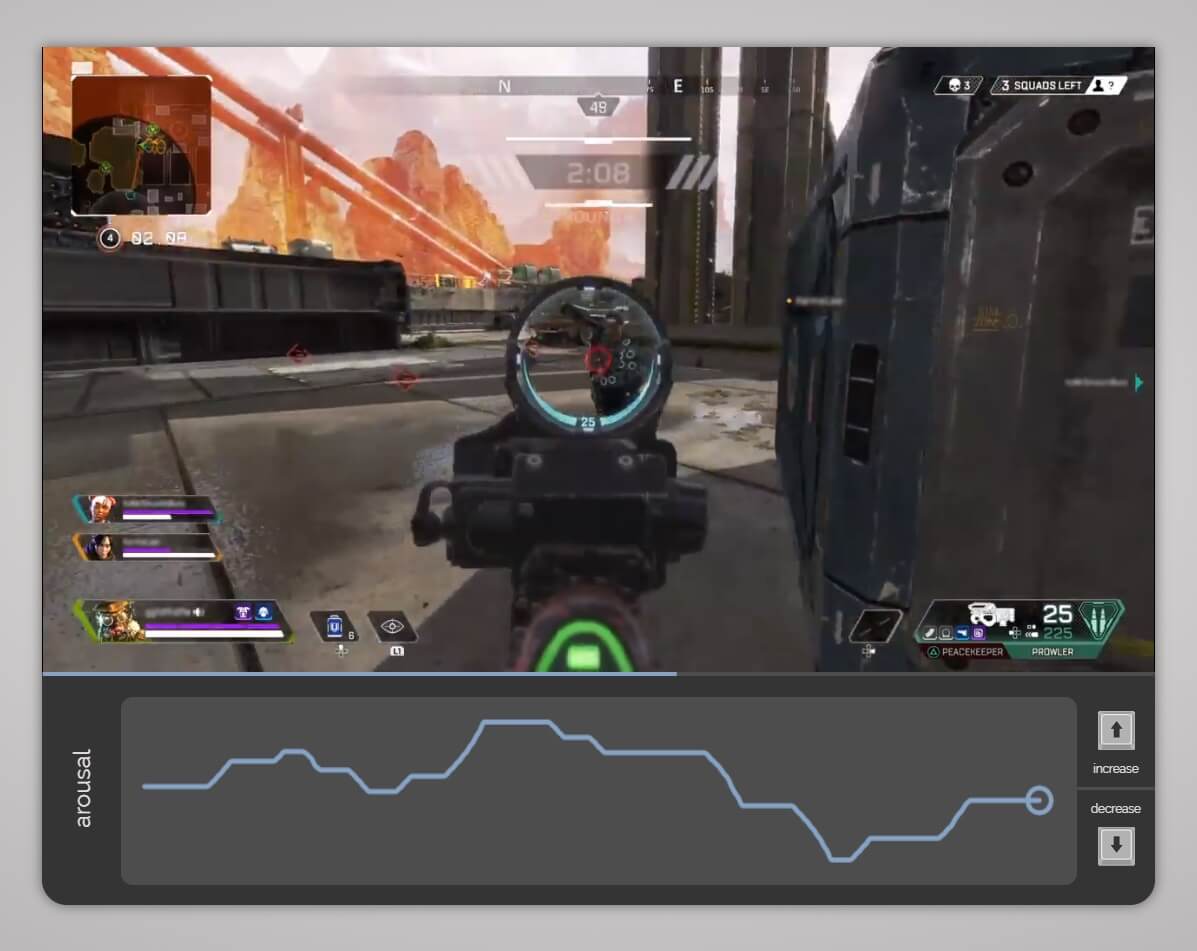

PAGAN: Video Affect Annotation Made Easy

David Melhart, Antonios Liapis and Georgios N. Yannakakis

PAGAN interface for end-users to annotate their recorded gameplay (in this case) through the RankTrace protocol.

Abstract: How could we gather affect annotations in a rapid, unobtrusive, and accessible fashion? How could we still make sure that these annotations are reliable enough for data-hungry affect modelling methods? This paper addresses these questions by introducing PAGAN, an accessible, general-purpose, online platform for crowdsourcing affect labels in videos. The design of PAGAN overcomes the accessibility limitations of existing annotation tools, which often require advanced technical skills or even the on-site involvement of the researcher. Such limitations often yield affective corpora that are restricted in size, scope and use, as the applicability of modern data-demanding machine learning methods is rather limited. The description of PAGAN is accompanied by an exploratory study which compares the reliability of three continuous annotation tools currently supported by the platform. Our key results reveal higher inter-rater agreement when annotation traces are processed in a relative manner and collected via unbounded labelling.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2019. BibTex

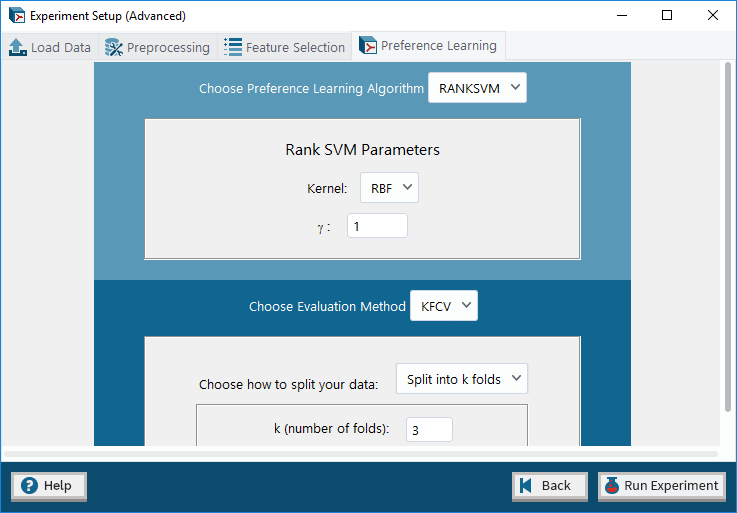

PyPLT: Python Preference Learning Toolbox

Elizabeth Camilleri, Georgios N. Yannakakis, David Melhart and Antonios Liapis

Editor interface for pyPLT, where advanced users can customize their preference learning algorithms' parameters.

Abstract: There is growing evidence suggesting that subjective values such as emotions are intrinsically relative and that an ordinal approach is beneficial to their annotation and analysis. Ordinal data processing yields more reliable, valid and general predictive models, and preference learning algorithms have shown a strong advantage in deriving computational models from such data. To enable the extensive use of ordinal data processing and preference learning, this paper introduces the Python Preference Learning Toolbox. The toolbox is open source, features popular preference learning algorithms and methods, and is designed to be accessible to a wide audience of researchers and practitioners. The toolbox is evaluated with regards to both the accuracy of its predictive models across two affective datasets and its usability via a user study. Our key findings suggest that the implemented algorithms yield accurate models of affect while its graphical user interface is suitable for both novice and experienced users.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2019. BibTex

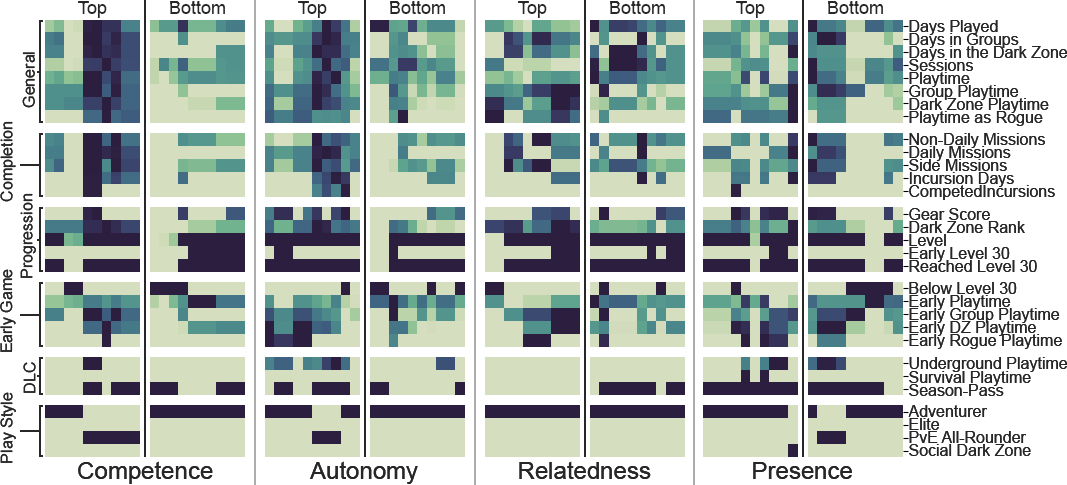

Your Gameplay Says It All: Modelling Motivation in Tom Clancy's The Division

David Melhart, Ahmad Azadvar, Alessandro Canossa, Antonios Liapis and Georgios N. Yannakakis

Highest and lowest scoring players in terms of each of the four predicted motivation factors of the Ubisoft Perceived Experience Questionnaire.

Abstract: Is it possible to predict the motivation of players just by observing their gameplay data? Even if so, how should we measure motivation in the first place? To address the above questions, on the one end, we collect a large dataset of gameplay data from players of the popular game Tom Clancy's The Division. On the other end, we ask them to report their levels of competence, autonomy, relatedness and presence using the Ubisoft Perceived Experience Questionnaire. After processing the survey responses in an ordinal fashion we employ preference learning methods based on support vector machines to infer the mapping between gameplay and the reported four motivation factors. Our key findings suggest that gameplay features are strong predictors of player motivation as the best obtained models reach accuracies of near certainty, from 92% up to 94% on unseen players.

in Proceedings of the IEEE Conference on Games, 2019. BibTex

A Study on Affect Model Validity: Nominal vs Ordinal Labels

David Melhart, Konstantinos Sfikas, Giorgos Giannakakis, Georgios N. Yannakakis and Antonios Liapis

Abstract: The question of representing emotion computationally remains largely unanswered: popular approaches require annotators to assign a magnitude (or a class) of some emotional dimension, while an alternative is to focus on the relationship between two or more options. Recent evidence in affective computing suggests that following a methodology of ordinal annotations and processing leads to better reliability and validity of the model. This paper compares the generality of classification methods versus preference learning methods in predicting the levels of arousal in two widely used affective datasets. The findings of this initial study further validate the hypothesis that approaching affect labels as ordinal data and building models via preference learning yields models of better validity.

in Proceedings of the IJCAI workshop on AI and Affective Computing, 2018. BibTex

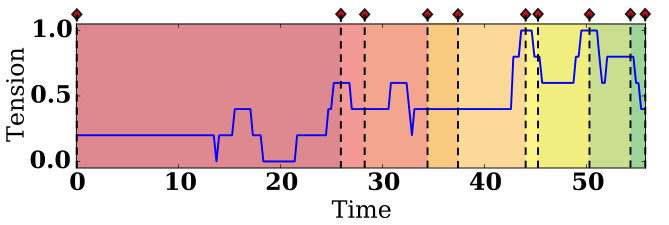

RankTrace: Relative and Unbounded Affect Annotation

Phil Lopes, Georgios N. Yannakakis and Antonios Liapis

Player-provided annotation trace of tension in a Sonancia playthrough. The trace is split into continuous windows based on game events, when the player changed rooms.

Abstract: How should annotation data be processed so that it can best characterize the ground truth of affect? This paper attempts to address this critical question by testing various methods of processing annotation data on their ability to capture phasic elements of skin conductance. Towards this goal the paper introduces a new affect annotation tool, RankTrace, that allows for the annotation of affect in a continuous yet unbounded fashion. RankTrace is tested on first-person annotations of tension elicited from a horror video game. The key findings of the paper suggest that the relative processing of traces via their mean gradient yields the best and most robust predictors of phasic manifestations of skin conductance.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2017. BibTex

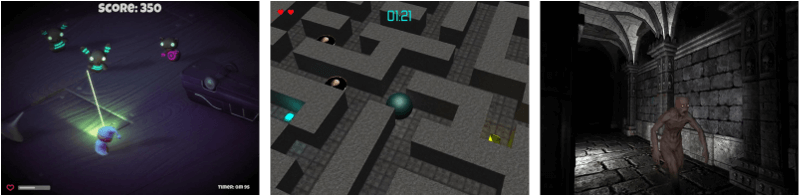

Towards General Models of Player Affect

Elizabeth Camilleri, Georgios N. Yannakakis and Antonios Liapis

The three games played and annotated by users; the paper tests how well a computational model of arousal which learned from annotations in two games predicts annotations in the third.

Abstract: While the primary focus of affective computing has been on constructing efficient and reliable models of affect, the vast majority of such models are limited to a specific task and domain. This paper, instead, investigates how computational models of affect can be general across dissimilar tasks; in particular, in modeling the experience of playing very different video games. We use three dissimilar games whose players annotated their arousal levels on video recordings of their own playthroughs. We construct models mapping ranks of arousal to skin conductance and gameplay logs via preference learning and we use a form of cross-game validation to test the generality of the obtained models on unseen games. Our initial results comparing between absolute and relative measures of the arousal annotation values indicate that we can obtain more general models of player affect if we process the model output in an ordinal fashion.

in Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2017. BibTex

Modelling Affect for Horror Soundscapes

Phil Lopes, Antonios Liapis and Georgios N. Yannakakis

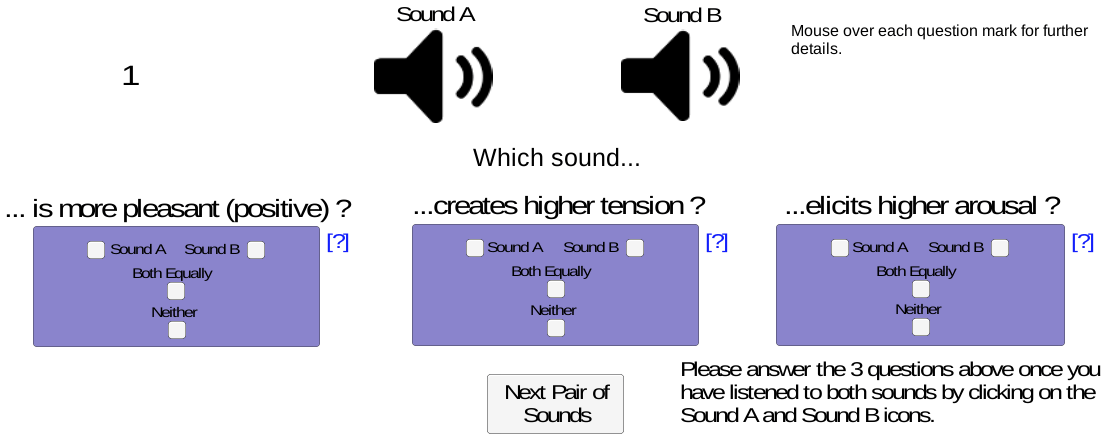

Web interface through which users ranked two sounds in terms of tension, arousal and valence.

Abstract: The feeling of horror within movies or games relies on the audience's perception of a tense atmosphere - often achieved through sound accompanied by the on-screen drama - guiding its emotional experience throughout the scene or game-play sequence. These progressions are often crafted through an a priori knowledge of how a scene or game-play sequence will play out, and the intended emotional patterns a game director wants to transmit. The appropriate design of sound becomes even more challenging once the scenery and the general context is autonomously generated by an algorithm. Towards realizing sound-based affective interaction in games this paper explores the creation of computational models capable of ranking short audio pieces based on crowdsourced annotations of tension, arousal and valence. Affect models are trained via preference learning on over a thousand annotations with the use of support vector machines, whose inputs are low-level features extracted from the audio assets of a comprehensive sound library. The models constructed in this work are able to predict the tension, arousal and valence elicited by sound, respectively, with an accuracy of approximately 65%, 66% and 72%.

in IEEE Transactions on Affective Computing, vol. 10, no. 2, pp. 209-222, 2019. BibTex