This article has been published at the Dagstuhl Seminar 22251 "Human-Game AI Interaction". The original publication, along with its bibtex entry and other information can be found here.

Artificial Intelligence (AI) in games has already been extensively used for the purposes of modeling players [26, 19]. This working group viewed issues of player modeling through the lens of personalized assistance, focusing on the horizon(s) that such models could use to learn from the past and to predict the future. While the scope and purpose of player models can vary [19], this working group focused on models of an individual person/player which can be generative in scope, i.e. generating "data where a human player could otherwise be consulted" [19].

Generators build upon design theories both explicitly and implicitly. Explicit models result from deliberate choices of a system designer to encode a form of the design knowledge for a domain (e.g., mazes) or a design process (e.g., the Mechanics, Dynamics, and Aesthetics (MDA) framework [11]). Implicit models are encoded within unacknowledged commitments expressed through algorithmic details (e.g., data structures, representation, generative methods) that may require critical analysis to be uncovered. Uncovering and acknowledging both the explicitly and implicitly encoded theories about the design process is key to learning from generators. Comparing them to each other and generalizing lessons learned from individual systems will lead to a broader, more inclusive, formal theory of game design. By examining the gap between generated products and exemplars made by human designers, it is possible to better understand the nature of the artifact being procedurally designed, thus building a formal theory of the artifacts being designed as well as of the process taken to design them.

What constitutes long-term personalization?

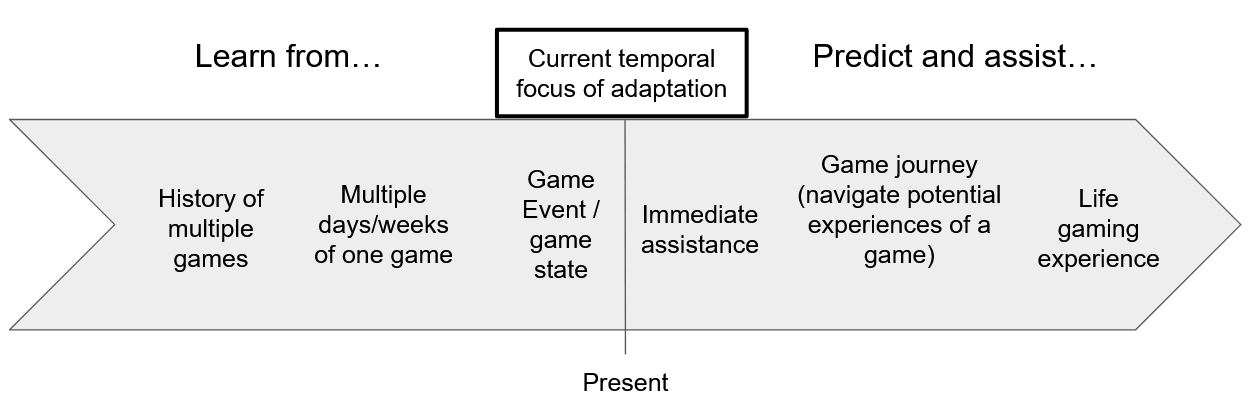

Horizon of past user activities and horizon of predicted future trends, used for choosing actions to take on the part of the assistive AI.

As the "long-term" aspect was central to the topic tackled by the working group, it is important to define the scope of such temporal and contextual information. As depicted in the figure above, the main questions on this aspect revolve around (a) the horizon of past user actions (and their context) that the model will learn from, and (b) the horizon of future expectations that the AI can predict and assist towards.

The working group identified that a personalized computational model could learn patterns from a very long-term history of player behavior at low granularity (with metrics such as game purchase behavior and/or playtime), a mid-term history within one game (spanning e.g. multiple days or weeks), or short-term history spanning a few actions or game-states within the current game context. In terms of what predictions the model could make, the working group similarly identified short-term predictions regarding actions within the current game session (e.g. whether the player would fail in an upcoming challenge), mid-term predictions regarding behaviors within the same game (e.g. which parts of future game content the player would enjoy and how), or longer-term predictions (e.g. when the player would quit this game, or which games they would pick up after it).
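To make the three past horizons more concrete, the following Python sketch slices a single player event log at each horizon: the last few actions of the current session (short-term), everything within the current game (mid-term), and a coarse cross-game summary (long-term, low granularity). The `Event` schema, the function names, and the use of per-game event counts as a stand-in for metrics such as playtime are hypothetical assumptions for illustration, not a system used by the working group.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    """One logged player event (hypothetical schema)."""
    timestamp: float  # seconds since an arbitrary epoch
    game_id: str      # game the event belongs to
    session_id: str   # play session within that game
    action: str       # e.g. "jump", "purchase", "quit"


def short_term(log: List[Event], session_id: str, k: int = 5) -> List[Event]:
    """Short-term horizon: the last k events of the current session."""
    if k <= 0:
        return []
    in_session = sorted((e for e in log if e.session_id == session_id),
                        key=lambda e: e.timestamp)
    return in_session[-k:]


def mid_term(log: List[Event], game_id: str) -> List[Event]:
    """Mid-term horizon: every event in the current game, across sessions."""
    return sorted((e for e in log if e.game_id == game_id),
                  key=lambda e: e.timestamp)


def long_term_summary(log: List[Event]) -> Dict[str, int]:
    """Long-term horizon at low granularity: only a per-game event count
    (an assumed proxy for playtime) is kept, not individual actions."""
    return dict(Counter(e.game_id for e in log))


# Usage: a tiny log spanning two games and three sessions.
log = [
    Event(1.0, "game_a", "s1", "purchase"),
    Event(2.0, "game_b", "s2", "jump"),
    Event(3.0, "game_b", "s3", "jump"),
    Event(4.0, "game_b", "s3", "fall"),
]
print([e.action for e in short_term(log, "s3", k=2)])  # ['jump', 'fall']
print(len(mid_term(log, "game_b")))                    # 3
print(long_term_summary(log))                          # {'game_a': 1, 'game_b': 3}
```

The coarser the horizon, the less detail is retained: the long-term view deliberately discards individual actions, mirroring the low-granularity history described above.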

We note here that an assistant AI does not necessarily require prediction of future states in order to provide assistance, as it can operate without a predicted output [27] by detecting patterns between this player and a broader player corpus through unsupervised learning (as would be the case for recommender systems, for instance). However, the context of the assistance fits the same time-scales as player predictions, ranging from short-term assistance (e.g. with a problem the player is currently facing in this phase or location of the game) to long-term assistance (e.g. suggesting similar games they can play once they finish this game).
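As one illustration of assistance that needs no predicted output, the following Python sketch implements neighbourhood-based collaborative filtering, a simple unsupervised technique: it ranks players in a corpus by the cosine similarity of their playtime profiles to the current player and suggests unplayed games that the most similar players spent time on. The data layout (hours per game) and the parameters `k` and `n` are assumptions for illustration; the sketch is not meant to describe how any commercial recommender, including Steam's [12], actually works.

```python
import math
from typing import Dict, List

Profile = Dict[str, float]  # hypothetical: hours played per game


def cosine(a: Profile, b: Profile) -> float:
    """Cosine similarity between two sparse playtime vectors."""
    dot = sum(a[g] * b[g] for g in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(player: Profile, corpus: List[Profile],
              k: int = 2, n: int = 3) -> List[str]:
    """Suggest up to n unplayed games, weighted by the k most similar players."""
    ranked = sorted(((cosine(player, other), other) for other in corpus),
                    key=lambda pair: pair[0], reverse=True)
    scores: Dict[str, float] = {}
    for sim, other in ranked[:k]:
        if sim <= 0.0:
            continue  # players with no overlap contribute nothing
        for game, hours in other.items():
            if game not in player:
                scores[game] = scores.get(game, 0.0) + sim * hours
    return sorted(scores, key=scores.get, reverse=True)[:n]


# Usage: a puzzle fan is matched with other puzzle players, not shooter fans.
me = {"puzzle_a": 10.0, "puzzle_b": 5.0}
corpus = [
    {"puzzle_a": 8.0, "puzzle_b": 6.0, "puzzle_c": 12.0},
    {"shooter_x": 40.0, "shooter_y": 20.0},
    {"puzzle_a": 2.0, "platformer_z": 3.0},
]
print(recommend(me, corpus))  # ['puzzle_c', 'platformer_z']
```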

At this level of granularity, there is an abundance of examples to draw from in commercial and research applications modeling past and future horizons. Indicatively, player profiles on Steam take into account game purchases in order to recommend similar games in the player's discovery queue [12] (long-term past for long-term future). At the other end of the spectrum, AI replicants [16] that follow the low-level decision-making of a single player in a single context (e.g. level layout and opponent) can be used to simulate the next in-game actions (short-term past for short-term future). In terms of more realistic mid-term assistance, the Drivatar models [20] learn how to drive as a player would and can generate complete playthroughs through sequences of short-term decisions in the style of the player, even on unseen tracks (mid-term past for short-term future). While not explicitly aimed at assisting players, similar work on churn prediction [8] focuses on learning patterns from a player's long- or mid-term gameplay and purchase history in order to predict when players may quit the game (long-/mid-term past for mid-term future); these predictions are often used to offer players interesting content or power-ups in the short term in order to keep them from dropping out.

How can the AI assist a player?

While to a large extent the algorithms for player modeling are already mature, a more pertinent issue relates to the type of assistance that such models can offer to players. Through extensive brainstorming, the working group identified the following non-exhaustive list of assistant AI actions:

- Game Selection: The assistant AI can suggest new games for the player to explore. At this level of granularity, the AI does not adapt any game content and relies on human-authored games that are better suited to this player.

- Modification of an initial game state: The assistant AI provides the player with new content within the same game, modifying the initial state via e.g. a new game level to explore [25], making a new mechanic available [1, 3], or new opponent abilities [13, 18]. The distinction here is that the assistant AI adapts the possibility space offered to the player without hand-holding the player on how they should take advantage of these possibilities. This assistance is also highly relevant in terms of generating end-game content through e.g. recombining existing hand-authored content in novel ways that match player preferences, performance, or expectations.

- Mechanics adaptation: The assistant AI intervenes at a finer granularity in the moment-to-moment playthrough by adjusting the game mechanics themselves. This could, for example, take the form of aim assistance that increases the leniency on what constitutes a hit in a shooter game (e.g. [23]). Due to its subtle nature, the same adaptation could also be used to increase accessibility in games that would normally require fast reflexes [21].

- Adapting the player's behavior towards a normative gameplay goal: Rather than changing the game according to the player's preferences, this assistant AI shoehorns the player into playing the game as-is, according to the designers' (rather than the players') intentions. This assistive AI can take two complementary roles, guiding players during their playthrough towards intended outcomes by scaffolding and mediating their learning, and by nudging them towards desirable behaviors. Scaffolding can be done through generated tutorials on overlooked game mechanics [7] or on-demand hints regarding the actions (or in-game knowledge) needed to overcome a current challenge (e.g. a puzzle). Nudging and priming, on the other hand, can be achieved by making certain decisions seem more appealing, and have been used extensively in both advertising [24] and teaching [4]. To guide a player towards a specific level traversal path, for example, that path may be adapted to have less clutter [22], sound emitters could be used to draw players towards their source [14], or user interface elements (e.g. quest markers) could be made more or less prominent. This type of assistance is perhaps the least explored academically in the context of games, while also being the most promising and realistic from the perspective of the game industry: game developers can streamline a singular play experience while taking advantage of existing, carefully crafted assets, without requiring unpredictable generation or adaptation.

- Providing Reflection and Explainability: The assistant AI provides feedback to the player regarding their performance or playstyle, allowing players themselves to reflect on how to improve the former or diversify the latter. While post-game summaries abound in games, the AI aspect can be leveraged to provide personalized feedback (e.g. presenting the metrics that are important to this player, based on their personal model) or to highlight aspects or portions of the playthrough where alternative decisions or actions could have led to better results, as a form of post-game scaffolding. Moreover, post-game visualizations can also serve to explain AI decisions or prompts made during the game by the other AI actions listed above. For example, in a racing game the player's trajectory on the track could be shown in a post-game summary, juxtaposed with an AI driver's trajectory and highlighting the points where the AI detected the need for (and verbalized) a course-correction hint. This AI action can therefore be a standalone component or an accompanying explanation for other AI actions.
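The aim-assistance example under mechanics adaptation can be sketched as a hit test with an extra tolerance margin. The following Python sketch assumes a 2D screen-space target with a circular hitbox, and a hypothetical schedule (`leniency_for`) that shrinks the margin as the player's recent accuracy rises; it illustrates the general idea only and is not the assistance technique evaluated in [23].

```python
import math
from dataclasses import dataclass


@dataclass
class Target:
    x: float       # target centre in screen space
    y: float
    radius: float  # true hitbox radius


def leniency_for(accuracy: float, max_bonus: float = 10.0) -> float:
    """Hypothetical schedule: a generous extra margin for inaccurate players,
    shrinking linearly to zero as recent accuracy (0..1) approaches 1."""
    accuracy = min(max(accuracy, 0.0), 1.0)
    return max_bonus * (1.0 - accuracy)


def is_hit(aim_x: float, aim_y: float, target: Target, leniency: float) -> bool:
    """A shot counts as a hit if it lands within the hitbox plus the margin."""
    distance = math.hypot(aim_x - target.x, aim_y - target.y)
    return distance <= target.radius + leniency


# Usage: the same near-miss, 12 units from a radius-5 target.
t = Target(0.0, 0.0, 5.0)
print(is_hit(12.0, 0.0, t, leniency_for(0.2)))  # True: margin of about 8
print(is_hit(12.0, 0.0, t, leniency_for(1.0)))  # False: no margin left
```

Because the margin is invisible and shrinks with accuracy, the same mechanism could serve both the subtle accessibility use and assistance that progressively fades as the player improves.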

The issue of assistance was central in this particular envisioned application of AI, specifically regarding how (in)visible the assistant would be (e.g. performing difficulty adjustment or aim assistance behind the scenes versus coaching players to better handle the game's challenges). Relatedly, whether the assistance would be on-demand or always in effect would impact the type of assistance the AI can provide, as well as players' perception of the AI and the explainability requirements. Depending on the type of assistance and how it is presented to the player, such an AI could take the role of salesperson, tutor, gamemaster, commentator, tour guide, or even a general on-demand virtual assistant similar to Siri or Google Assistant, but within the game.

What can the AI assist towards?

As a final dimension regarding the goals of the assistant, the player model could be trained to focus on a variety of metrics or key performance indicators (KPIs) of the player. The following non-exhaustive list covers some KPIs of interest:

- Emotional state: a player's emotional state in the game. AI assistance that keeps track of and aims to improve such a KPI could tailor content towards the intended emotional state (e.g. fear [6] or stress [15] in horror games) or course-correct in case the experienced emotions are overwhelming (e.g. in games for rehabilitation [9]).

- Performance: the difficulty or challenge a player faces in the game and how they overcome it (e.g. number of retries or game score). AI assistance can be used to tailor the experience to the player's skills. This is the most traditional application through dynamic difficulty adjustment [10], but can be enhanced beyond invisible rubber-banding through e.g. on-demand coaching and personalized assistance.

- Coverage: how much of the game a player has explored (or tends to explore). Coverage can refer to spatial coverage (e.g. heatmap of the level), action coverage (e.g. whether the player makes use of all mechanics and dynamics [11] available to them), narrative coverage (e.g. which non-player-character relationships the player has focused on and how), or temporal coverage (e.g. build orders in strategy games).

- Learning: how much the player has mastered the game's mechanics (or concepts) and improved their repertoire. This KPI could be especially meaningful for assistance on mechanics that the player has overlooked (e.g. via hints and tutorials) or has trouble with (e.g. via aim assist that progressively becomes less pronounced). The aspects of the game that the player has mastered can also inform recommendations for future games that specifically offer additional challenges (or content) in these specific aspects.

- Intentions: why and how a player likes to engage with the game. This is particularly meaningful for detecting cases where certain play behaviors are not due to poor performance but due to conscious decisions to play subversively or towards the player's own goals. A broader example of this would be speedrun challenges [17], where assistance should be tailored not to guide players towards maximizing spatial coverage but towards shortcuts or skill-based shorter traversal paths.

- Social experience: how a player interacts with other players in the game. This KPI is mostly relevant to multi-player games with a social component, such as massively multiplayer online games rather than competitive brawlers or racing games. The AI model could capture cases of toxic behavior or interactions within and outside the game, and its "assistance" could include warnings in cases of toxic behavior [2] either to the perpetrator or to new players interacting with them.

- Monetization: how a player spends real-world money in the game, and on which content. This KPI is less relevant for the research purposes of this working group, but could be relevant for industrial use cases. Moreover, extensive AI research on churn prediction and analytics [5, 8] has been motivated by monetization and thus cannot be overlooked. Ethical assistance in terms of this KPI could focus on the explainability and reflection aspect, highlighting to the player which purchase behaviors they tend to exhibit and coaching them towards diversifying or reducing their in-game purchases.
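For the performance KPI, a minimal dynamic difficulty adjustment loop might track the outcome of the player's recent attempts (e.g. retries of a challenge) and nudge a difficulty scalar so that the success rate stays near a target. The target rate, window size, step, and bounds below are illustrative assumptions, and this simple controller is a generic sketch rather than the approach described in [10].

```python
from collections import deque


class DifficultyAdjuster:
    """Minimal DDA sketch: nudge a difficulty scalar in [low, high] so that the
    success rate over the last `window` attempts stays near `target_rate`."""

    def __init__(self, target_rate: float = 0.7, window: int = 10,
                 step: float = 0.05, low: float = 0.1, high: float = 1.0) -> None:
        self.target_rate = target_rate
        self.outcomes: deque = deque(maxlen=window)  # True = attempt succeeded
        self.step = step
        self.low, self.high = low, high
        self.difficulty = 0.5  # assumed neutral starting point

    def record(self, success: bool) -> float:
        """Log one attempt and return the adjusted difficulty."""
        self.outcomes.append(success)
        rate = sum(self.outcomes) / len(self.outcomes)
        if rate > self.target_rate:    # succeeding too easily: make it harder
            self.difficulty = min(self.high, self.difficulty + self.step)
        elif rate < self.target_rate:  # failing too often: make it easier
            self.difficulty = max(self.low, self.difficulty - self.step)
        return self.difficulty


# Usage: five failed retries in a row lower the difficulty step by step.
dda = DifficultyAdjuster()
for _ in range(5):
    level = dda.record(False)
print(round(level, 2))  # 0.25
```

Used invisibly, this is the classic rubber-banding form of assistance; the same success-rate signal could instead trigger on-demand coaching or hints, as suggested for the performance KPI.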

Envisioned use-cases of assistant AI

After these high-level implications of personalized AI assistance were laid out, the working group focused on two practical examples, both involving nudging player behaviors to better experience a game as-is (without new content being generated for it). The first example focused on narrative-based games with explicit role-playing decision points. Speculative work on this narrower use-case explored different ways of nudging the player's decisions in terms of their invasiveness (i.e. how much the AI takes over the decision-making) and the subconscious nature of the nudging (i.e. whether the player might understand that they are being manipulated). The second example focused on helping players brake in a racing game, a task that relies on kinesthetic player behavior and where the AI aims to reduce the challenge of an existing game. Speculative work on this issue identified real-time audiovisual feedback as the most intuitive form of AI assistance, exploring how audio or visual feedback could be more or less invasive (e.g. a popup foreshadowing the type of upcoming turn, versus a ghost trail of the ideal trajectory for taking the turn). The two practical examples allowed for a more in-depth discussion of the specific challenges that would need to be addressed when developing personalized AI assistants.
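The braking example can be grounded in basic kinematics: at a constant deceleration a, slowing from speed v to a corner speed v_c takes a distance of (v^2 - v_c^2) / (2a). The Python sketch below uses that formula to decide when a brake hint should appear. The constant-deceleration model, the reaction-time buffer, and the two hint levels are assumptions for illustration; a personalized assistant might instead fit `decel` and `reaction_time` to the individual player's own braking history.

```python
from typing import Optional


def braking_distance(speed: float, corner_speed: float, decel: float) -> float:
    """Distance (m) to slow from `speed` to `corner_speed` (both m/s) at a
    constant deceleration `decel` (m/s^2): (v^2 - v_c^2) / (2 * a)."""
    if speed <= corner_speed:
        return 0.0
    return (speed ** 2 - corner_speed ** 2) / (2.0 * decel)


def brake_hint(speed: float, corner_speed: float, distance_to_corner: float,
               decel: float, reaction_time: float = 0.5) -> Optional[str]:
    """Return "brake now", "brake soon", or None when no hint is needed."""
    if speed <= corner_speed:
        return None  # already slow enough for the corner
    needed = braking_distance(speed, corner_speed, decel)
    buffer = speed * reaction_time  # distance travelled while the player reacts
    if distance_to_corner <= needed + buffer:
        return "brake now"
    if distance_to_corner <= needed + 2.0 * buffer:
        return "brake soon"
    return None


# Usage: 40 m/s into a 20 m/s corner at 10 m/s^2 needs 60 m of braking.
print(braking_distance(40.0, 20.0, 10.0))  # 60.0
print(brake_hint(40.0, 20.0, 90.0, 10.0))  # brake soon
print(brake_hint(40.0, 20.0, 75.0, 10.0))  # brake now
```

The two hint levels could map onto feedback of different invasiveness, for example a subtle audio cue for "brake soon" and a more prominent visual prompt for "brake now".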

References

[1] Eric Butler, Adam M. Smith, Yun-En Liu, and Zoran Popovic. A mixed-initiative tool for designing level progressions in games. In Proceedings of the ACM Symposium on User Interface Software and Technology, pages 377–386, 2013.

[2] Alessandro Canossa, Dmitry Salimov, Ahmad Azadvar, Casper Harteveld, and Georgios Yannakakis. For honor, for toxicity: Detecting toxic behavior through gameplay. In Proceedings of the ACM CHI PLAY Conference, 2021.

[3] Michael Cook, Simon Colton, Azalea Raad, and Jeremy Gow. Mechanic Miner: Reflection-driven game mechanic discovery and level design. In Applications of Evolutionary Computation, volume 7835, LNCS. Springer, 2012.

[4] Mette Trier Damgaard and Helena Skyt Nielsen. Nudging in education. Economics of Education Review, 64:313–342, 2018.

[5] Magy Seif El-Nasr, Anders Drachen, and Alessandro Canossa. Game Analytics: Maximizing the Value of Player Data. Springer, 2013.

[6] Magy Seif El-Nasr, Simon Niedenthal, Igor Knez, Priya Almeida, and Joseph Zupko. Dynamic lighting for tension in games. Game Studies, 7(1), 2007.

[7] Michael Cerny Green, Ahmed Khalifa, Gabriella A. B. Barros, and Julian Togelius. "Press Space to Fire": Automatic Video Game Tutorial Generation. In Proceedings of the AIIDE workshop on Experimental AI in Games, 2018.

[8] Fabian Hadiji, Rafet Sifa, Anders Drachen, Christian Thurau, Kristian Kersting, and Christian Bauckhage. Predicting player churn in the wild. In Proceedings of the IEEE Conference on Computational Intelligence in Games, 2014.

[9] Christoffer Holmgård, Georgios N. Yannakakis, Karen-Inge Karstoft, and Henrik Steen Andersen. Stress detection for PTSD via the StartleMart Game. In Proceedings of the Conference on Affective Computing and Intelligent Interaction, pages 523–528, 2013.

[10] Robin Hunicke. The case for dynamic difficulty adjustment in games. In Proceedings of the International Conference on Advances in Computer Entertainment Technology, 2005.

[11] Robin Hunicke, Marc Leblanc, and Robert Zubek. MDA: A formal approach to game design and game research. In Proceedings of AAAI Workshop on the Challenges in Games AI, 2004.

[12] Erik Johnson. A deep dive into Steam's Discovery Queue 2. https://www.gamedeveloper.com/business/a-deep-dive-into-steam-s-discovery-queue, 2019. Accessed 6 July, 2022.

[13] Ahmed Khalifa, Scott Lee, Andy Nealen, and Julian Togelius. Talakat: Bullet hell generation through constrained MAP-Elites. In Proceedings of the Genetic and Evolutionary Computation Conference, pages 1047–1054, 2018.

[14] Daryl Marples, Duke Gledhill, and Pelham Carter. The effect of lighting, landmarks and auditory cues on human performance in navigating a virtual maze. In Proceedings of the Symposium on Interactive 3D Graphics and Games, 2020.

[15] Paraschos Moschovitis and Alena Denisova. Keep calm and aim for the head: Biofeedback-controlled dynamic difficulty adjustment in a horror game. IEEE Transactions on Games, 2022.

[16] Johannes Pfau, Antonios Liapis, Georg Volkmar, Georgios N. Yannakakis, and Rainer Malaka. Dungeons & Replicants: Automated game balancing via deep player behavior modeling. In Proceedings of the IEEE Conference on Games, 2020.

[17] Tom Phillips. World record Portal speedrun completed in 8 minutes. https://www.eurogamer.net/world-record-portal-speedrun-completed-in-8-minutes, 2012. Accessed 6 July, 2022.

[18] Kristin Siu, Eric Butler, and Alexander Zook. A programming model for boss encounters in 2D action games. In Proceedings of the AIIDE workshop on Experimental AI in Games, 2016.

[19] Adam M. Smith, Chris Lewis, Kenneth Hullett, Gillian Smith, and Anne Sullivan. An inclusive taxonomy of player modeling. Technical Report UCSC-SOE-11-13, University of California, Santa Cruz, 2011.

[20] Tommy Thompson. How Forza's Drivatar actually works. https://www.gamedeveloper.com/design/how-forza-s-drivatar-actually-works, 2021. Accessed 6 July, 2022.

[21] Tommy Thompson and Matthew Syrett. 'Play Your Own Way': Adapting a procedural framework for accessibility. In Proceedings of the FDG Workshop on Procedural Content Generation, 2018.

[22] Christopher W. Totten. An Architectural Approach to Level Design, chapter Teaching in Levels through Visual Communication. CRC Press, 2014.

[23] Rodrigo Vicencio-Moreira, Regan L Mandryk, and Carl Gutwin. Balancing multiplayer first-person shooter games using aiming assistance. In 2014 IEEE Games Media Entertainment. IEEE, 2014.

[24] Markus Weinmann, Christoph Schneider, and Jan vom Brocke. Digital nudging. Business & Information Systems Engineering, 58:433–436, 2016.

[25] Georgios Yannakakis and Julian Togelius. Experience-driven procedural content generation. IEEE Transactions on Affective Computing, 2(3):147–161, 2011.

[26] Georgios N. Yannakakis, Pieter Spronck, Daniele Loiacono, and Elisabeth André. Player modeling. In Simon M. Lucas, Michael Mateas, Mike Preuss, Pieter Spronck, and Julian Togelius, editors, Artificial and Computational Intelligence in Games, volume 6 of Dagstuhl Follow-Ups, pages 45–59. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, 2013.

[27] Georgios N. Yannakakis and Julian Togelius. Artificial Intelligence and Games. Springer, 2018. http://gameaibook.org.
