This article has been published at the Dagstuhl Seminar 25292 "New Frontiers in AI for Game Design". The original publication, along with its BibTeX entry and other information, will be available soon.

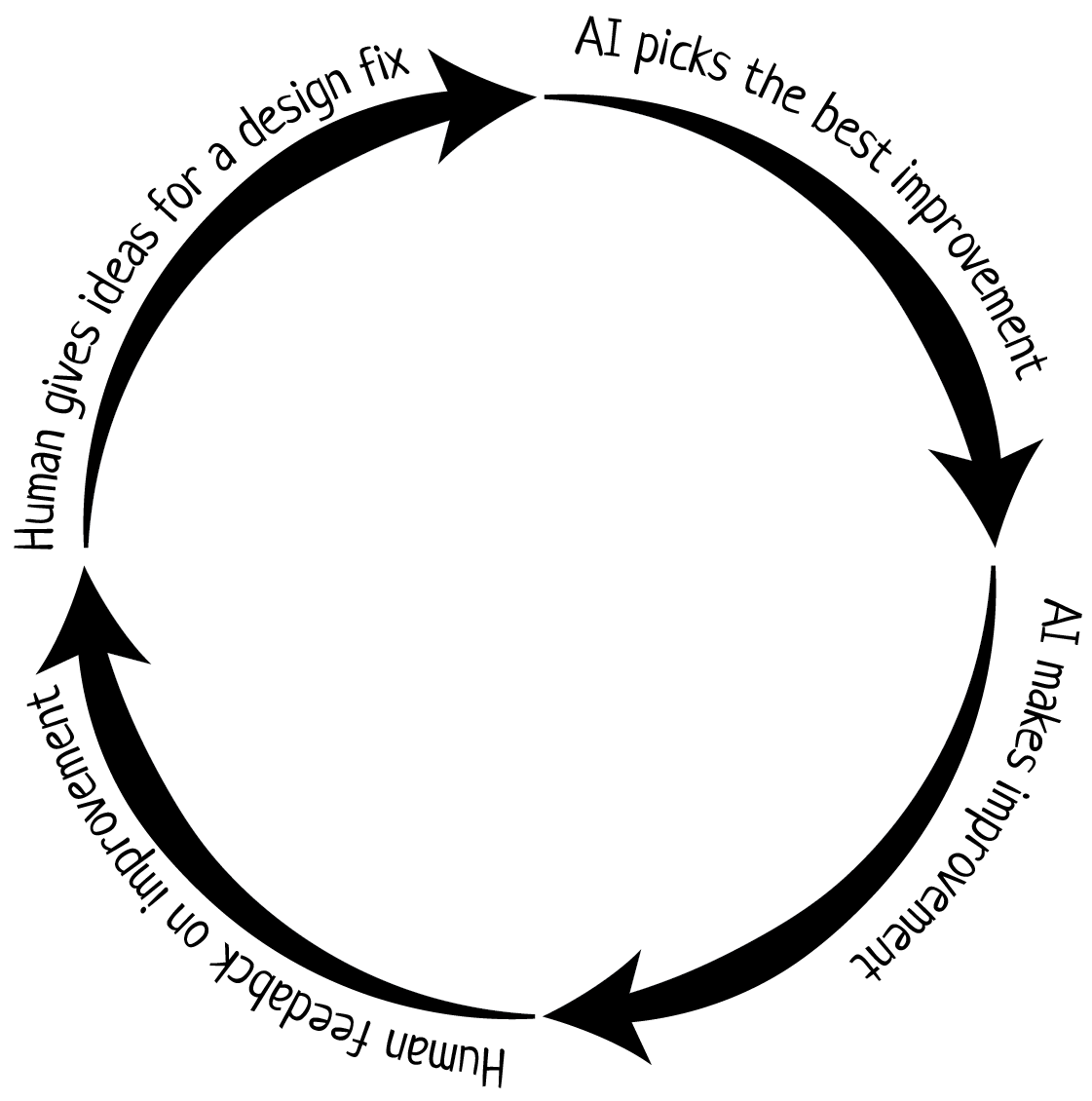

Figure 1: Process followed to produce the games: the human designer primarily tested the game and provided feedback, while the AI selected what (and how) to change in the game code.

While the generation of game content and even complete games [3, 11] is well established in academia [15] and practice [16], the now-ubiquitous techno-optimism of data-driven Generative AI or GenAI¹ raises timely and critical questions. It is easy to dismiss claims made on creators' blogs [1] and social media about GenAI's ease-of-use in creating game code or assets; similar claims about nearly every type of work (creative or not) abound. It is arguably easy to dismiss academic research in (partial) game generation via GenAI [14, 17] as proof-of-concept without general applications. And yet, AI-generated content is increasingly popular in published games: almost 20% of all games released on Steam in 2025 have disclosed use of "AI Generated Content", eight times as many as in 2024 [8]. The implications of GenAI in game development practice cannot be ignored.

This working group identified several questions around the technical feasibility of GenAI-based games, and their implications in terms of ethics, ownership, and (human) creativity. The main questions investigated were:

- How does GenAI handle the creative decision points of game development, and what does this mean for the creativity of a human developer working with it?

- How closely can GenAI replicate an existing game without access to its codebase, and what are the implications for Intellectual Property protection?

- How "in control" does a human designer with a clear idea for the game feel when implementing it via GenAI?

Primarily, the working group took on the challenge of creating fully functional games in a day or less, using as little human input as possible. The practical activities revolved around an iterative loop: the human gave ideas for fixes and improvements to the work-in-progress game, the AI picked the best improvement and implemented it in the game, and the human then gave feedback on the improvement (see Fig. 1). This process is a special case of vibe-coding [5], which is the dominant approach for using LLMs for coding tasks. The practical game development tasks described above were complemented with ad-hoc points of reflection, in which the group discussed how the human "creator" was feeling, especially in terms of ownership, creativity, and control over the process and the product [6].
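The loop of Fig. 1 can be sketched in a few lines of Python. This is only an illustration of the division of labor described above, not the tooling used by the working group: the names `GameSession`, `run_iterations`, and the `demo_*` stubs are hypothetical, and in practice the "propose", "pick", and "implement" steps were LLM queries while the feedback step was a human playing the game.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GameSession:
    """Work-in-progress game code plus a log of the changes the AI chose."""
    code: str
    applied: List[str] = field(default_factory=list)


def run_iterations(
    session: GameSession,
    human_feedback: Callable[[str], str],
    propose: Callable[[str, str], List[str]],
    pick_best: Callable[[List[str]], str],
    implement: Callable[[str, str], str],
    max_iterations: int,
) -> GameSession:
    """Repeat: human tests and comments, AI lists options, picks one, applies it."""
    for _ in range(max_iterations):
        feedback = human_feedback(session.code)        # human step
        candidates = propose(session.code, feedback)   # AI lists improvements
        if not candidates:
            break                                      # nothing left to improve
        choice = pick_best(candidates)                 # AI selects what to change
        session.code = implement(session.code, choice) # AI decides how to change it
        session.applied.append(choice)
    return session


# Hypothetical stand-ins for the human tester and the LLM calls.
def demo_feedback(code: str) -> str:
    return "cursor does not respond" if "cursor" not in code else "looks flat"


def demo_propose(code: str, feedback: str) -> List[str]:
    if "cursor" in feedback:
        return ["fix cursor input", "add particles"]
    return ["add particles"]


def demo_pick(candidates: List[str]) -> str:
    return candidates[0]


def demo_implement(code: str, change: str) -> str:
    return code + "\n# " + change
```

Note that the human never selects the change in this sketch; they only supply feedback, which mirrors the limited role of the designer in the process.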

Case 1: GenAI handling [most] creative decisions

For Case 1, we wanted to leave the maximum creative freedom to the AI, focusing only on our subjective comments about the game's playability rather than about the creative decisions. To maximize the end-to-end ideation, we followed a game jam format [7] and generated one theme using an online (non-LLM) theme generator: the resulting theme was "islands". After this, we relied on Anthropic's Claude as our LLM of choice for all concept and code generation phases.

Using the generated theme "islands", we prompted Claude for game ideas for this theme "that will wow the other participants", which returned 6 game ideas. We then asked Claude to rank these ideas on which "will generate the most buzz"; the response ranked Island Genesis first, which we then used for the rest of the game generation pipeline. The original description of Island Genesis in Claude's first response for Case 1 was:

A reverse city-builder where you play as a volcanic island that's slowly sinking. You must strategically grow land masses and guide the last inhabitants to safety before you're completely submerged. Time pressure with emotional storytelling.

Starting from this description, we followed the iterative cycle of Fig. 1 to produce a game, first using the PICO-8 [9] engine and then the Godot [12] game engine.

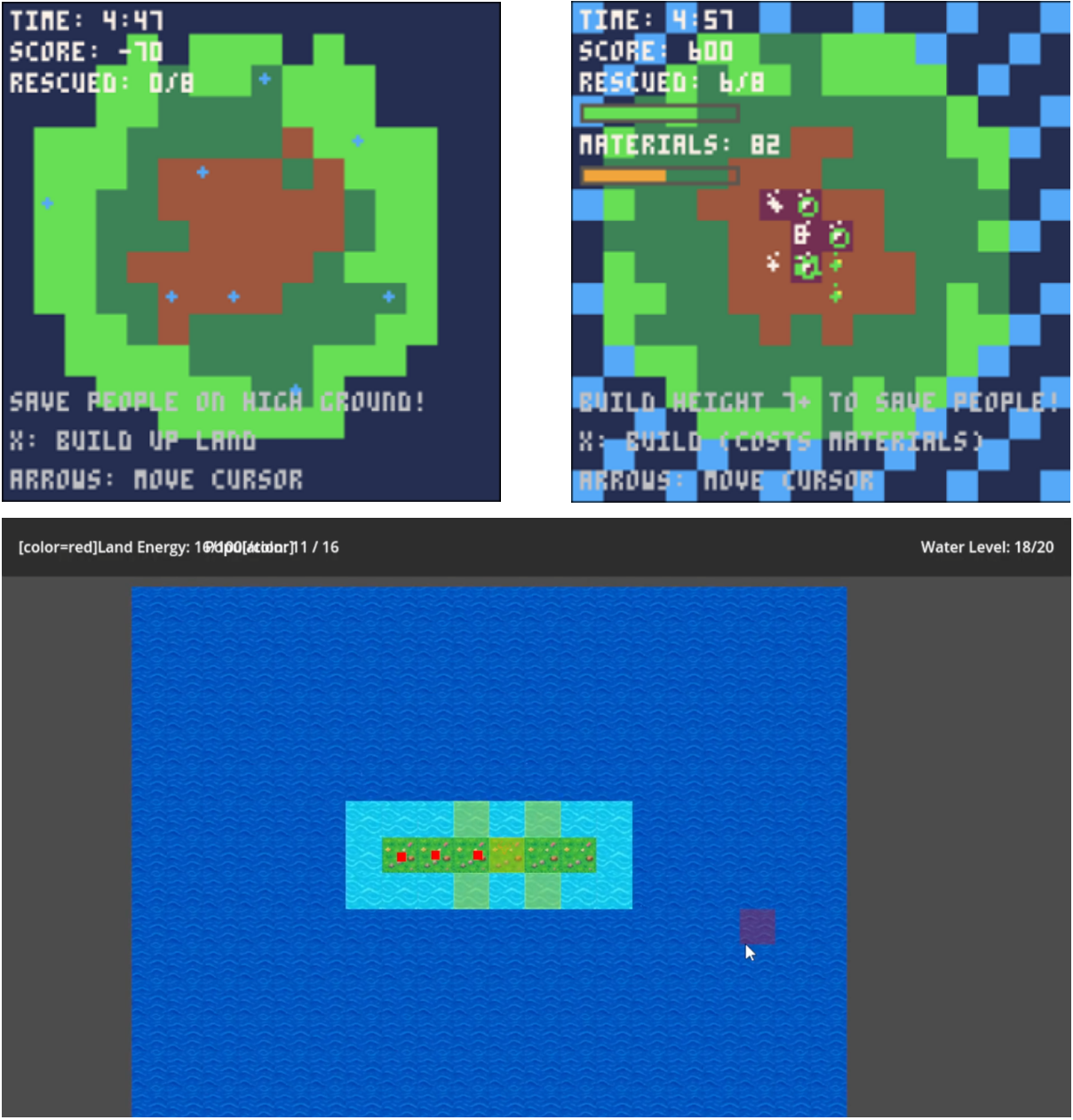

Using the PICO-8 engine, the first LLM response already added the very basics of the game (see Fig. 2; top left): a visualization of an island, the people to be saved, and a basic game state display; the island also already sank slowly. However, cursor interaction did not work and the game was unplayable. Follow-up iterations involved a human playing the game and producing feedback, with Claude listing possible improvements and then picking the (LLM-perceived) best one to work on. Improvements over ten iterations, lasting around 2 hours, resulted in visual polish (e.g. particle effects, progress bars) and game design additions (e.g. better villager pathfinding, slowly renewing materials used for growing land). The resulting game is playable (see Fig. 2; top right) and, at times, even engaging. The visuals are polished enough for a PICO-8 game.

Figure 2: Screenshots of Island Genesis in PICO-8 (top) and Godot (bottom).

Following the relative success of the PICO-8 Island Genesis, we tried replicating the process (with the same high-level description) with the Godot [12] game engine, which is more complex. Given the same time and effort, the resulting game (see Fig. 2; bottom) is much worse than the PICO-8 game. Likely reasons include the more complex visuals (high-resolution textures) and complex file structures (compared to the single script used in PICO-8). The former raises the expectation for visual quality and increases the challenge of image generation, while the latter is harder for the LLM to process (given limited context length) and to create responses for (which takes far more time than for PICO-8). This suggests that a game with a much larger scope, regardless of whether that scope comes from more complex software or more complex game mechanics, will yield worse results when using only GenAI planning and programming.

Case 2: GenAI reproducing an existing game

While the other two cases explored the balance of creative decisions between LLM and human co-creators, Case 2 explores how this game development process works when neither human nor LLM has creative agency. All creative decisions have already been taken in advance by someone else: in our case, the creator of an existing game. Another benefit of this approach is that we already have available the description, screenshots, and access to the original game to play and assess intended gameplay when giving feedback to the LLM. This is unlike the other two cases where, to use the creative journey as a metaphor, neither LLM nor human knows the destination (instead, they explore together) and it is unclear what constitutes the end of the journey.

Given the success of Claude at generating PICO-8 games in Case 1, we browsed recent high-ranking PICO-8 games on itch.io and chose the game SuperHotRoids to replicate. Among our selection criteria, we considered that the game's recent release would make it less likely that the LLM is trained on information about the actual game. SuperHotRoids mixes up the mechanics of Superhot and Asteroids: a spaceship attempts to shoot down asteroids while time slows when the player does not interact with the game's controls. We will use the title SuperHotRoids to refer to our own GenAI-made game in Case 2, since we try to replicate it in full.
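The core mechanic described above (game time slows while the player gives no input) can be illustrated with a minimal sketch. This is not code from the original game or from our reproduction: the function names and the slow-down factor of 0.25 are assumptions chosen for illustration only.

```python
def time_scale(player_is_acting: bool, slow_factor: float = 0.25) -> float:
    """Return the multiplier applied to game time this frame.

    slow_factor is a hypothetical value; the original game's tuning is unknown.
    """
    return 1.0 if player_is_acting else slow_factor


def step_asteroid(x: float, vx: float, dt: float, player_is_acting: bool) -> float:
    """Advance an asteroid's position along one axis using scaled game time."""
    scaled_dt = dt * time_scale(player_is_acting)
    return x + vx * scaled_dt
```

Under these assumptions, an asteroid moving at 60 units per second covers 30 units in half a second of play while the player is steering or shooting, but only 7.5 units in the same half second while the controls are idle.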

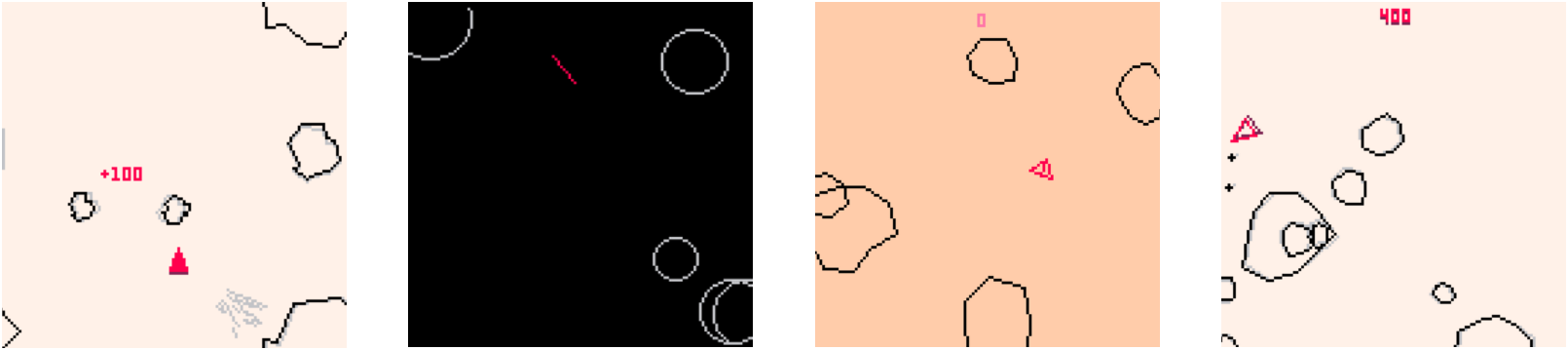

Given an initial description of the game mechanics and screenshots from the original game, we prompted OpenAI's GPT-4o to reproduce the game. The returned code was entered into PICO-8, and feedback was given to the LLM. Such feedback contained information on missing mechanics, misrepresented graphics, balancing constraints, or errors in the returned code. Over the course of 30 queries, the basic game loop, as well as a main menu and a high-score screen, were replicated. We show snapshots of the process in Fig. 3. The overall process took about 3 hours. While the final product looks similar to the original game, it still needs polish in balancing and mechanics.

Figure 3: The original SuperHotRoids (far left) and checkpoints of the reproduction process at 1, 5 and 30 queries.

This case raises several ethical considerations regarding the reproduction of an existing game. We doubt that the LLM had prior access to the SuperHotRoids game code; the reproduction was based solely on textual descriptions and screenshots provided by us. However, the resulting game is very much a derivative of the original. We therefore refrain from publishing the resulting game so as not to infringe on the game developers' copyright of SuperHotRoids. While this experiment demonstrates technical feasibility, similar methods could be misused to clone or imitate original works without consent, thus undermining the value of human creative labor.

Case 3: GenAI following a human creator

For Case 3, we followed a more traditional pipeline for co-creative tools: a human creator taking the initiative and the AI following [10]. We consider Case 3 a closer approximation of how GenAI will be used by indie developers, novices and students [4]. Specifically, the game was produced with entirely human game design decisions, and entirely AI-authored code. Using this process, we created a simple camera-based game (named Webcam Island Builder by the human author) connected to the "islands" theme of Case 1. The LLM of choice was Google's Gemini 2.5 Flash via Cursor, and the game engine was Pygame (with Pymunk for physics).

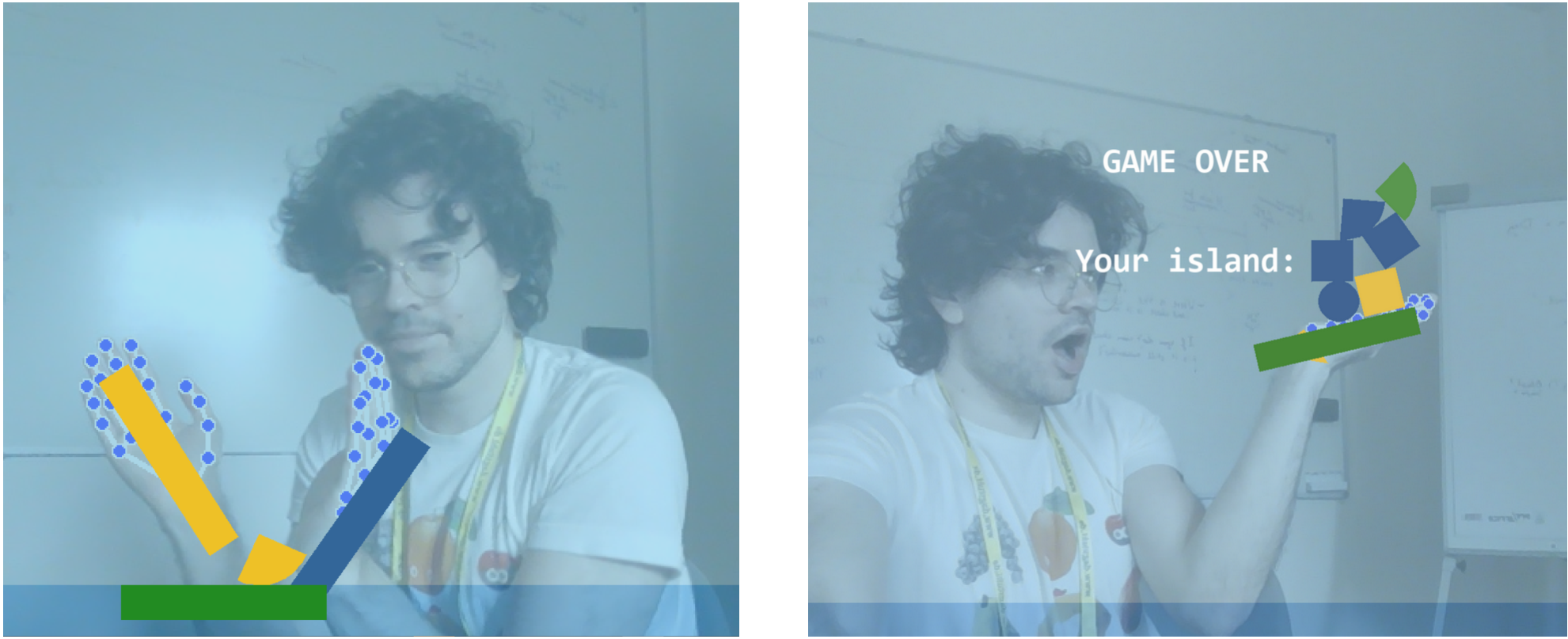

The game concept revolves around webcam tracking: real-time footage of the player is used to physically interact with the game world. The player uses their hands, which act as rigid bodies in the game's physics, to guide randomly selected shapes onto a moving platform before time runs out. At the end, the player sees the "island" they created (see Fig. 4). The application is more of a toy, with no scoring or losing conditions.

This high-level concept was decided before engaging at all with GenAI systems. While the first prompts were exploratory (e.g. to list appropriate libraries for game physics and webcam tracking), subsequent prompts were much more specific (e.g. "implement a timer that ends the game after 60 seconds"). A point was made to not allow creative decisions or design changes from the LLM. All prompts were designed to limit the LLM's contributions to purely implementing clearly outlined functions.
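To give a sense of how narrowly scoped such prompts were, the timer request quoted above maps to only a few lines of code. The sketch below is our own illustration, not the code generated by the LLM; the class name `RoundTimer` and its attributes are hypothetical.

```python
class RoundTimer:
    """Counts up to a fixed duration and reports when the round is over."""

    def __init__(self, duration_s: float = 60.0) -> None:
        self.duration_s = duration_s
        self.elapsed_s = 0.0

    def update(self, dt_s: float) -> None:
        """Advance the timer by one frame; negative steps are ignored."""
        self.elapsed_s = min(self.duration_s, self.elapsed_s + max(0.0, dt_s))

    @property
    def remaining_s(self) -> float:
        """Seconds left before the round ends (never below zero)."""
        return self.duration_s - self.elapsed_s

    @property
    def finished(self) -> bool:
        """True once the full duration has elapsed."""
        return self.elapsed_s >= self.duration_s
```

In a Pygame main loop, the per-frame step would typically come from `pygame.time.Clock.tick()`, which returns the milliseconds since the previous call, so `timer.update(clock.tick(60) / 1000.0)` followed by a check of `timer.finished` would be one way to end the round.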

Figure 4: An in-progress (left) and end-game (right) screenshot of Webcam Island Builder.

The game was functional within an hour of this process, and only minor adjustments were needed after this. Overall, the game (while simple in terms of game logic) does what the designer expected and thus satisfies the (human) brief. The adjustments made after testing the game were small in scale (e.g. move speed of platforms), and they always followed the human designer's intuition and preferences.

Conclusions

Abiding by the intents of this working group, four games were created within a day², all with some degree of playability. We explored how three different LLMs handle game development tasks, and used three different game engines to make the games.

In terms of technical feasibility, PICO-8 seemed better suited for the LLM of choice (Claude) to design for, compared to Godot. We hypothesize on the reasons for this disparity in Case 1. More broadly, however, we can argue that lower-fidelity games with limited assets (graphics, audio, logic, interface, narrative) are easier for GenAI to produce. Such types of games are most often produced by a single indie developer over the course of a couple of days. This could suggest that GenAI can help novices (who do not have a team or technical skills) make a game faster, such as during a game jam. On the other hand, such indie developers are most vulnerable to GenAI misuse, as "simple" games such as SuperHotRoids (see Case 2) can be easily reproduced, resulting in Intellectual Property theft.

In terms of perceived creator agency, we observed that engaging even superficially with the work-in-progress game and suggesting feedback to the LLM did increase the sense of ownership on the part of the human co-creator. However, we hypothesize that this sense of ownership is not how a game developer feels about their game; rather, it is closer to how a Quality Assurance tester (or fan) whose feedback has been heard feels about a product that is ultimately not theirs. Ethical issues of creativity, authorship [13], and intellectual property (especially regarding Case 2) remain critical in this new era of GenAI. Moreover, it was surprising to find out that games made in a day seemed to us³ to be "good enough". It is as exciting as it is worrying to speculate on what "good enough" will be perceived as in a year or a decade from now, and whether this shift will be due to a leap in Artificial Intelligence or due to a stagnation in human skill, appreciation and imagination [2].

1: We primarily consider GenAI to cover Large Language Models (LLMs) and Text-To-Image Models trained on massive amounts of data, often as black-box models owned by corporations.

2: Technically, each game took a couple of hours to make.

3: We purposefully chose game development tools that we were unskilled in.

References

[1] Bartek Bogacki. Building a working game in an hour: My experience with vibe coding, 2025. Accessed August 2025.

[2] Simon Colton. Creativity versus the perception of creativity in computational systems. In Proceedings of the AAAI Symposium on Creative Intelligent Systems, 2008.

[3] Michael Cook, Simon Colton, and Jeremy Gow. The ANGELINA videogame design system—Part I. IEEE Transactions on Games, 9(2):192–203, 2016.

[4] Daniel Cox, John Murray, and Anastasia Salter. Routine, twisty, and queer: Pasts and futures of games programming pedagogy with no and low code tools. In Proceedings of the 20th International Conference on the Foundations of Digital Games, 2025.

[5] Benj Edwards. Will the future of software development run on vibes?, 2025. Accessed 21 Sep 2025.

[6] Anna Jordanous. Four PPPPerspectives on computational creativity. In Proceedings of the Second International Symposium on Computational Creativity, 2015.

[7] Annakaisa Kultima. Defining game jam. In Proceedings of the Foundations of Digital Games Conference, 2015.

[8] Ichiro Lambe. The new surprising number of Steam games that use GenAI, 2025. Accessed August 2025.

[9] Lexaloffle Games. PICO-8: A fantasy console, 2023. Accessed August 2025.

[10] Antonios Liapis, Gillian Smith, and Noor Shaker. Mixed-initiative content creation. In Noor Shaker, Julian Togelius, and Mark J. Nelson, editors, Procedural Content Generation in Games: A Textbook and an Overview of Current Research, pages 195–214. Springer, 2016.

[11] Antonios Liapis, Georgios N. Yannakakis, Mark J. Nelson, Mike Preuss, and Rafael Bidarra. Orchestrating game generation. IEEE Transactions on Games, 11(1):48–68, 2019.

[12] Juan Linietsky, Ariel Manzur, and the Godot community. Godot game engine, 2014. Accessed August 2025.

[13] Jon McCormack, Toby Gifford, and Patrick Hutchings. Autonomy, authenticity, authorship and intention in computer generated art. In Proceedings of the AAAI Symposium on Creative Intelligent Systems, 2019.
